audioLIME: Listenable Explanations Using Source Separation

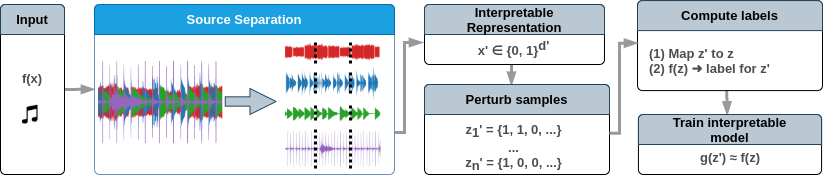

Deep neural networks (DNNs) are successfully applied to a wide variety of music information retrieval (MIR) tasks, but their predictions are usually not interpretable. We propose audioLIME, a method based on Local Interpretable Model-agnostic Explanations (LIME), extended by a musical definition of locality. The perturbations used in LIME are created by switching on and off components extracted by source separation, which makes our explanations listenable. We validate audioLIME on two different music tagging systems and show that it produces sensible explanations in situations where a competing method cannot.
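The core loop described above can be illustrated with a minimal NumPy sketch. It is not the audioLIME package's API. It assumes the separated sources are already available as an array (in practice they would come from a source-separation model), and it wraps the tagger in a hypothetical `predict` function that maps a waveform to one tag score. Each perturbation switches sources on or off, re-mixes the active ones, and queries the model. A weighted linear surrogate is then fitted over the on/off bits. The cosine-distance kernel, `kernel_width`, and the ridge penalty follow common LIME practice and are assumptions here, not details taken from the paper.

```python
import numpy as np


def audiolime_sketch(sources, predict, n_samples=500, kernel_width=0.25,
                     ridge=1e-3, seed=0):
    """Score how much each separated source contributes to a model output.

    sources: array of shape (k, n), k separated components (assumed to sum
        approximately to the original mix).
    predict: hypothetical black-box function mapping a 1-D waveform to a
        scalar score, e.g. the probability of one tag.
    Returns one importance score per source (surrogate coefficients).
    """
    rng = np.random.default_rng(seed)
    k = sources.shape[0]

    # Interpretable representation: one on/off bit per separated source.
    z = rng.integers(0, 2, size=(n_samples, k))
    z[0] = 1  # always include the unperturbed mix (all sources on)

    # Perturbed inputs: re-mix only the sources that are switched on.
    y = np.array([predict(row @ sources) for row in z], dtype=float)

    # Locality weights: cosine distance of each bit vector to all-ones.
    # For binary z this reduces to 1 - sqrt(#on / k); all-off gives 1.
    dist = 1.0 - np.sqrt(z.sum(axis=1) / k)
    weights = np.exp(-dist ** 2 / kernel_width ** 2)

    # Weighted ridge regression with an unpenalized intercept column.
    X = np.hstack([np.ones((n_samples, 1)), z.astype(float)])
    reg = ridge * np.eye(k + 1)
    reg[0, 0] = 0.0
    lhs = X.T @ (weights[:, None] * X) + reg
    rhs = X.T @ (weights * y)
    beta = np.linalg.solve(lhs, rhs)
    return beta[1:]


# Toy check: three "sources" on disjoint samples and a linear "tagger"
# that reacts strongly to source 0, weakly to source 1, not at all to 2.
toy_sources = np.eye(3, 8)


def toy_predict(x):
    return 2.0 * x[0] + 0.5 * x[1]


scores = audiolime_sketch(toy_sources, toy_predict)
print(np.round(scores, 3))
```

Because the explanation is expressed in terms of whole sources, the highest-scoring components can be re-mixed and played back, which is what makes this kind of explanation listenable rather than a saliency map over spectrogram pixels.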
