Attention-Guided Lightweight Network for Real-Time Segmentation of Robotic Surgical Instruments

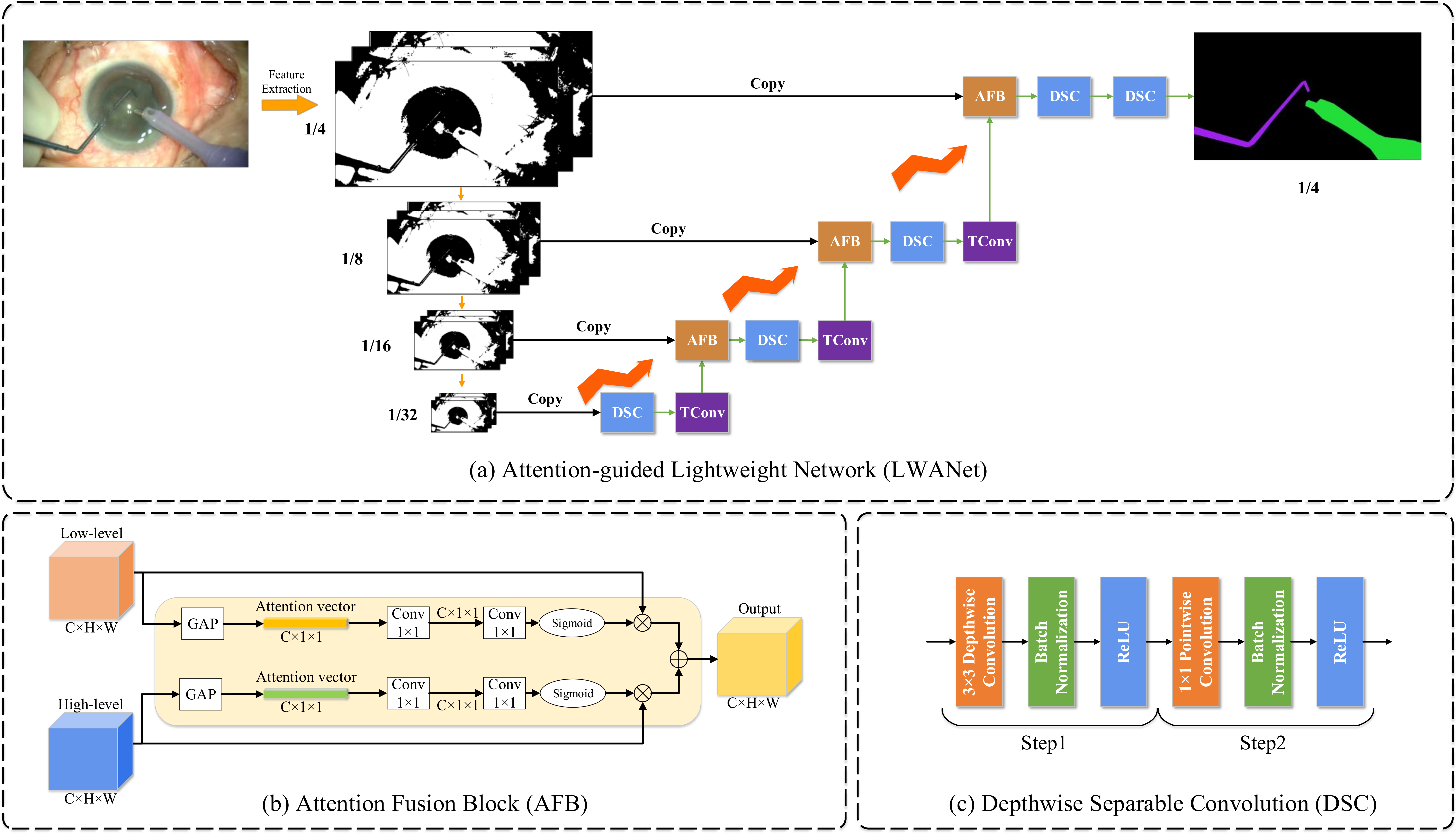

Real-time segmentation of surgical instruments plays a crucial role in robot-assisted surgery. However, deploying deep learning models for this task remains challenging because of their high computational cost and slow inference speed. In this paper, we propose an attention-guided lightweight network (LWANet) that segments surgical instruments in real time. LWANet adopts an encoder-decoder architecture in which the encoder is the lightweight network MobileNetV2 and the decoder consists of depthwise separable convolution, the attention fusion block, and transposed convolution. Depthwise separable convolution serves as the basic unit of the decoder, which reduces model size and computational cost. The attention fusion block captures global context and encodes semantic dependencies between channels to emphasize target regions, which helps locate the surgical instrument. Transposed convolution upsamples the feature maps to recover refined edges. LWANet segments surgical instruments in real time at low computational cost: with 960×544 inputs, its inference speed reaches 39 fps at only 3.39 GFLOPs. The model is also small, with only 2.06 M parameters. The proposed network is evaluated on two datasets. It achieves state-of-the-art performance of 94.10% mean IoU on Cata7 and sets a new record on EndoVis 2017 with a 4.10% increase in mean IoU.
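
To illustrate why building the decoder from depthwise separable convolution reduces model size, the sketch below compares weight counts for a standard convolution and its depthwise separable counterpart. The helper names and the layer sizes (64 input channels, 64 output channels, 3×3 kernel) are assumptions chosen for illustration; they are not taken from LWANet.

```python
def standard_conv_params(c_in, c_out, k):
    """Weight count of a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weight count of a k x k depthwise convolution followed by a
    1 x 1 pointwise convolution (bias omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, for illustration only (not from the paper).
c_in, c_out, k = 64, 64, 3

std = standard_conv_params(c_in, c_out, k)
sep = depthwise_separable_params(c_in, c_out, k)

print(f"standard conv weights:            {std}")
print(f"depthwise separable conv weights: {sep}")
print(f"reduction factor:                 {std / sep:.2f}x")
```

For this hypothetical layer the separable form needs 4,672 weights instead of 36,864. The multiply-accumulate count scales by the same ratio at a fixed output resolution, which is consistent with the reduced computational cost described above.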
