ADAHESSIAN: An Adaptive Second Order Optimizer for Machine Learning

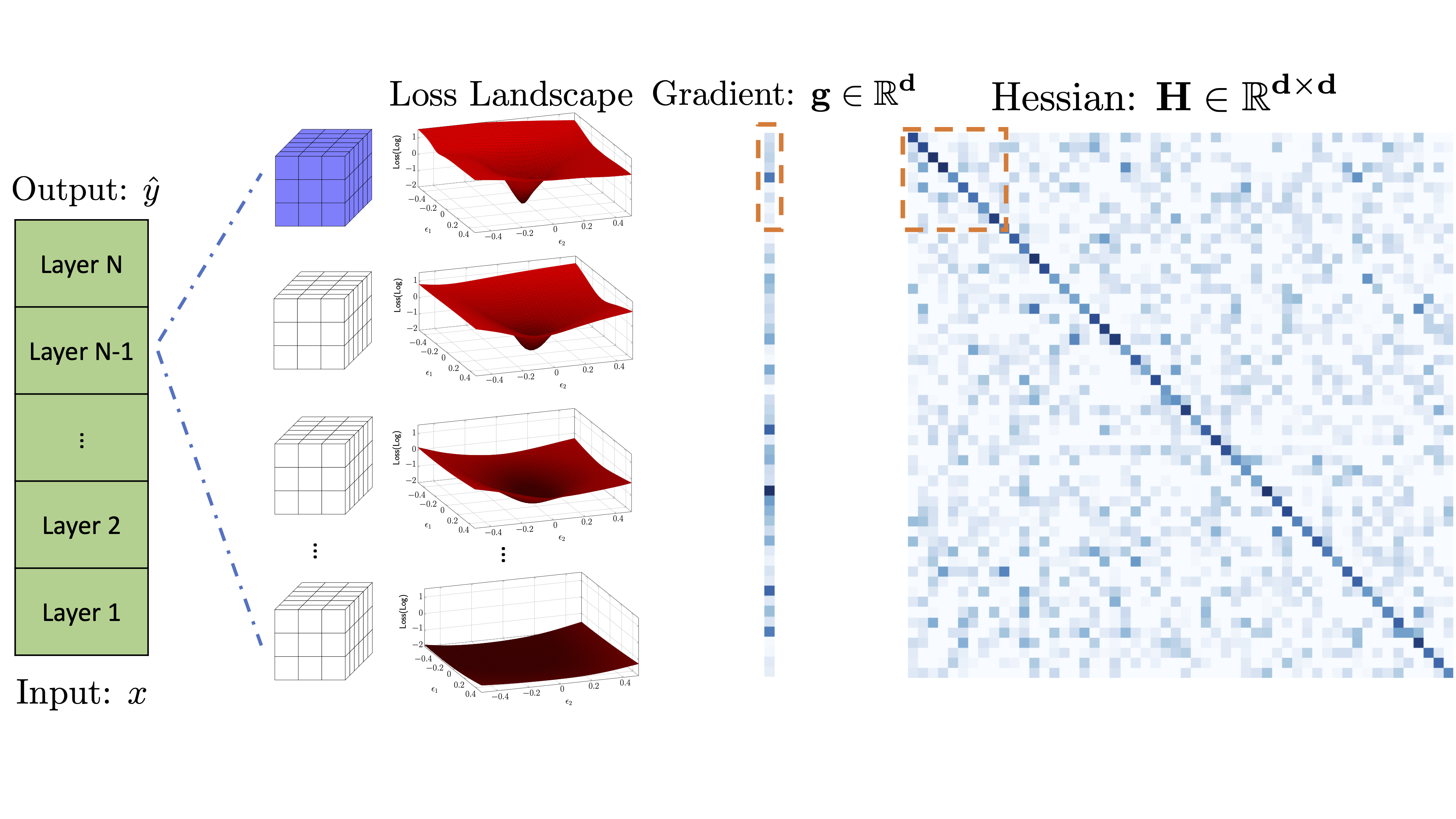

We introduce ADAHESSIAN, a second order stochastic optimization algorithm which dynamically incorporates the curvature of the loss function via ADAptive estimates of the HESSIAN. Second order algorithms are among the most powerful optimization algorithms with superior convergence properties as compared to first order methods such as SGD and Adam. The main disadvantage of traditional second order methods is their heavier per iteration computation and poor accuracy as compared to first order methods. To address these, we incorporate several novel approaches in ADAHESSIAN, including: (i) a fast Hutchinson based method to approximate the curvature matrix with low computational overhead; (ii) a root-mean-square exponential moving average to smooth out variations of the Hessian diagonal across different iterations; and (iii) a block diagonal averaging to reduce the variance of Hessian diagonal elements. We show that ADAHESSIAN achieves new state-of-the-art results by a large margin as compared to other adaptive optimization methods, including variants of Adam. In particular, we perform extensive tests on CV, NLP, and recommendation system tasks and find that ADAHESSIAN: (i) achieves 1.80%/1.45% higher accuracy on ResNets20/32 on Cifar10, and 5.55% higher accuracy on ImageNet as compared to Adam; (ii) outperforms AdamW for transformers by 0.13/0.33 BLEU score on IWSLT14/WMT14 and 2.7/1.0 PPL on PTB/Wikitext-103; (iii) outperforms AdamW for SqueezeBert by 0.41 points on GLUE; and (iv) achieves 0.032% better score than Adagrad for DLRM on the Criteo Ad Kaggle dataset. Importantly, we show that the cost per iteration of ADAHESSIAN is comparable to first order methods, and that it exhibits robustness towards its hyperparameters.

PDF Abstract

WikiText-2

WikiText-2

WikiText-103

WikiText-103