Active Learning on a Budget: Opposite Strategies Suit High and Low Budgets

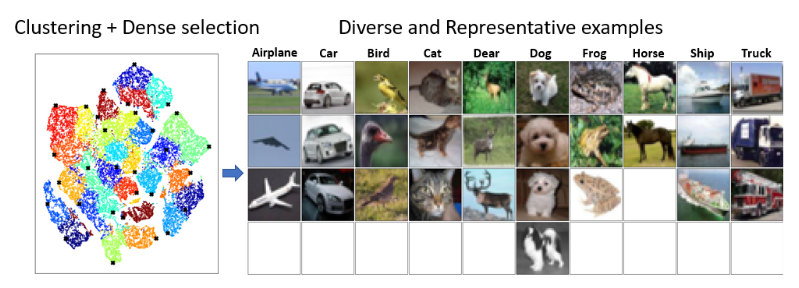

Investigating active learning, we focus on the relation between the number of labeled examples (budget size), and suitable querying strategies. Our theoretical analysis shows a behavior reminiscent of phase transition: typical examples are best queried when the budget is low, while unrepresentative examples are best queried when the budget is large. Combined evidence shows that a similar phenomenon occurs in common classification models. Accordingly, we propose TypiClust -- a deep active learning strategy suited for low budgets. In a comparative empirical investigation of supervised learning, using a variety of architectures and image datasets, TypiClust outperforms all other active learning strategies in the low-budget regime. Using TypiClust in the semi-supervised framework, performance gets an even more significant boost. In particular, state-of-the-art semi-supervised methods trained on CIFAR-10 with 10 labeled examples selected by TypiClust, reach 93.2% accuracy -- an improvement of 39.4% over random selection. Code is available at https://github.com/avihu111/TypiClust.

PDF AbstractCode

Tasks

Datasets

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |

|---|---|---|---|---|---|---|

| Active Learning | CIFAR10 (10,000) | TypiClust | Accuracy | 93.2 | # 1 |

CIFAR-10

CIFAR-10

ImageNet

ImageNet

CIFAR-100

CIFAR-100

Tiny ImageNet

Tiny ImageNet