Abstract Reasoning with Distracting Features

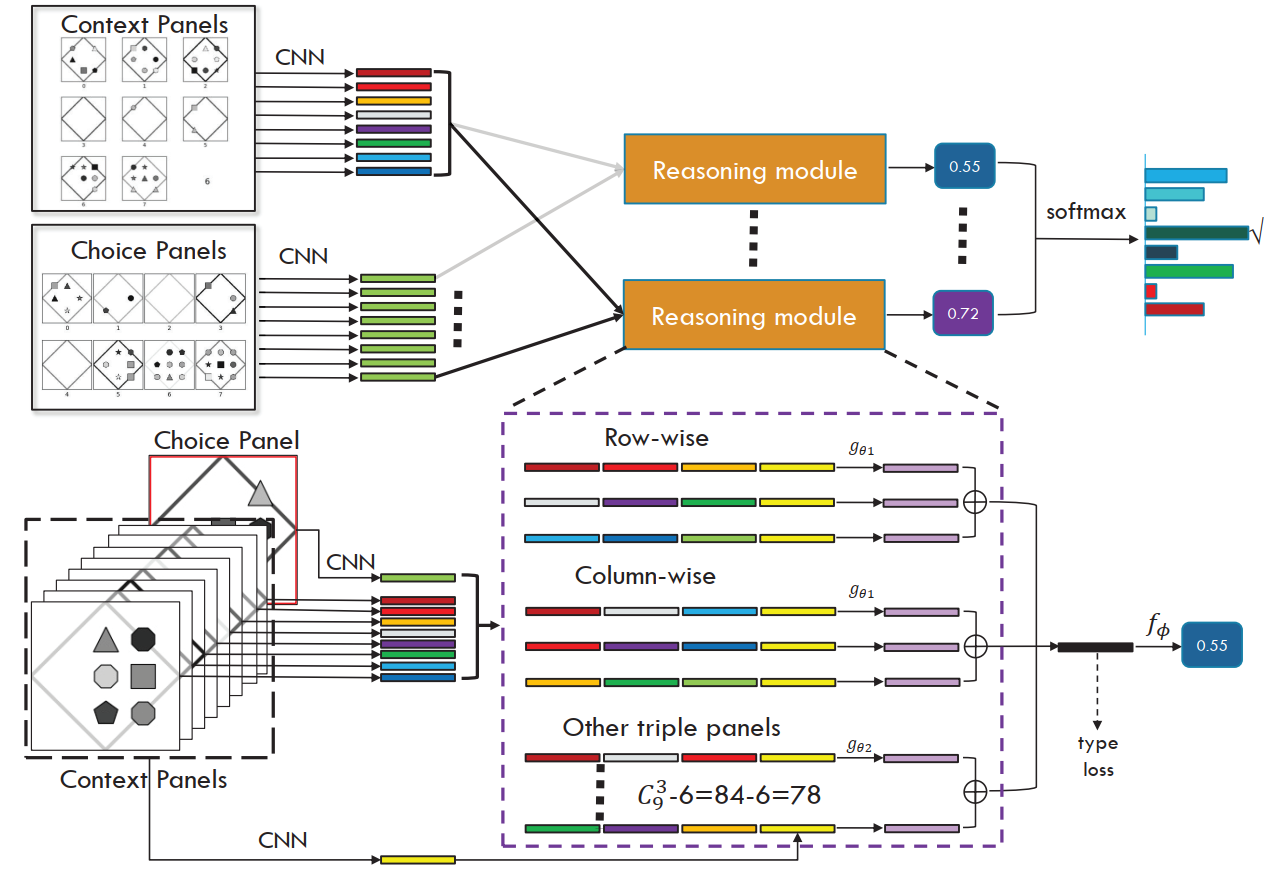

Abstract reasoning is a long-standing challenge in artificial intelligence. Recent studies suggest that many of the deep architectures that have triumphed in other domains fail to work well on abstract reasoning. In this paper, we first illustrate that one of the main challenges in such a reasoning task is the presence of distracting features, which requires the learning algorithm to leverage counterevidence and reject false hypotheses in order to learn the true patterns. We then show that a carefully designed learning trajectory over different categories of training data can effectively boost learning performance by mitigating the impact of distracting features. Inspired by this finding, we propose the feature robust abstract reasoning (FRAR) model, which consists of a reinforcement-learning-based teacher network that determines the sequence of training and a student network that makes predictions. Experimental results demonstrate strong improvements over baseline algorithms: we beat the state-of-the-art models by 18.7% on the RAVEN dataset and 13.3% on the PGM dataset.
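To make the teacher/student idea concrete, the following is a minimal toy sketch and not the FRAR implementation. It swaps the reinforcement-learning teacher network for a simple epsilon-greedy bandit and the student network for a scalar "accuracy" that grows by an assumed per-category gain. The names `BanditTeacher`, `ToyStudent`, `run_curriculum`, the category labels, and the gain values are all hypothetical and chosen only for illustration.

```python
import random


class BanditTeacher:
    """Epsilon-greedy stand-in for a teacher that picks the next training category."""

    def __init__(self, categories, epsilon=0.2, lr=0.3, seed=0):
        self.categories = list(categories)
        self.epsilon = epsilon          # probability of exploring a random category
        self.lr = lr                    # step size for the running value estimates
        self.values = {c: 0.0 for c in self.categories}
        self.rng = random.Random(seed)  # local RNG so runs are reproducible

    def choose(self):
        # Explore with probability epsilon, otherwise pick the best-valued category.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.categories)
        return max(self.categories, key=lambda c: self.values[c])

    def update(self, category, reward):
        # Move the estimate for this category toward the observed reward.
        self.values[category] += self.lr * (reward - self.values[category])


class ToyStudent:
    """Stand-in for a real network: accuracy is a scalar with diminishing returns."""

    def __init__(self, gains):
        self.gains = gains              # assumed per-category gain in [0, 1]
        self.accuracy = 0.1

    def train_on(self, category):
        self.accuracy += self.gains[category] * (1.0 - self.accuracy)
        return self.accuracy


def run_curriculum(teacher, student, steps):
    """Let the teacher order the training data; reward is the student's improvement."""
    history = []
    for _ in range(steps):
        category = teacher.choose()
        before = student.accuracy
        after = student.train_on(category)
        teacher.update(category, after - before)
        history.append(category)
    return history


if __name__ == "__main__":
    teacher = BanditTeacher(["few_distractors", "many_distractors"], seed=0)
    student = ToyStudent({"few_distractors": 0.05, "many_distractors": 0.01})
    order = run_curriculum(teacher, student, steps=100)
    print(order[:10], round(student.accuracy, 3))
```

The reward here is simply the change in the toy accuracy after one training step, which is one plausible signal for a teacher. The paper's actual teacher, reward, and data categories may differ.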

NeurIPS 2019

RAVEN

AbstractReasoning

PGM
