A Unified Query-based Paradigm for Point Cloud Understanding

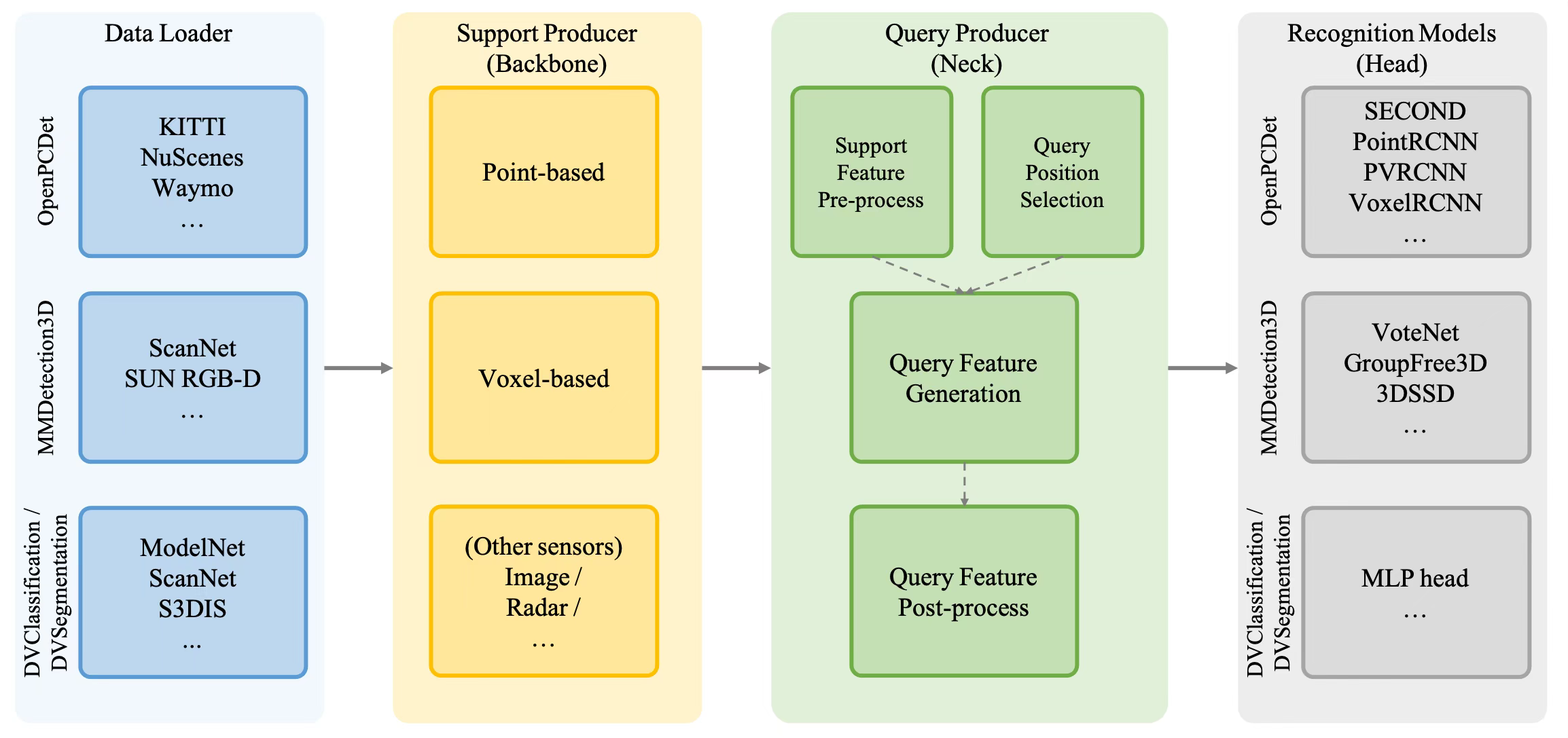

3D point cloud understanding is an important component in autonomous driving and robotics. In this paper, we present a novel Embedding-Querying paradigm (EQ-Paradigm) for 3D understanding tasks including detection, segmentation, and classification. EQ-Paradigm is a unified paradigm that enables the combination of any existing 3D backbone architecture with different task heads. Under the EQ-Paradigm, the input is first encoded in the embedding stage with an arbitrary feature extraction architecture, which is independent of tasks and heads. Then, the querying stage makes the encoded features applicable to diverse task heads. This is achieved by introducing an intermediate representation, i.e., Q-representation, in the querying stage to serve as a bridge between the embedding stage and task heads. We design a novel Q-Net as the querying stage network. Extensive experimental results on various 3D tasks, including object detection, semantic segmentation, and shape classification, show that EQ-Paradigm in tandem with Q-Net is a general and effective pipeline, which enables flexible collaboration between backbones and heads and further boosts the performance of state-of-the-art methods. Code and models are available at https://github.com/dvlab-research/DeepVision3D.
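The two-stage flow described in the abstract can be illustrated with a minimal, dependency-free sketch. Everything here is hypothetical and chosen for illustration only: the names `embed`, `query`, `run_pipeline`, and both toy heads are not taken from the released code, and the assumption that each task supplies its own query positions is ours. In particular, the querying stage below uses plain inverse-distance-weighted interpolation as a stand-in, since the abstract does not describe the internals of Q-Net.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]
Feature = List[float]


def embed(points: Sequence[Point]) -> Tuple[List[Point], List[Feature]]:
    """Embedding stage (toy stand-in for an arbitrary 3D backbone).

    Returns support points and one feature per support point:
    the offset from the cloud centroid plus the distance to it.
    """
    n = len(points)
    centroid = tuple(sum(p[i] for p in points) / n for i in range(3))
    feats = [
        [p[0] - centroid[0], p[1] - centroid[1], p[2] - centroid[2],
         math.dist(p, centroid)]
        for p in points
    ]
    return list(points), feats


def query(support: Sequence[Point], feats: Sequence[Feature],
          queries: Sequence[Point], k: int = 3, eps: float = 1e-8) -> List[Feature]:
    """Querying stage (NOT Q-Net: simple inverse-distance interpolation).

    Produces one feature per query position, i.e. a toy Q-representation.
    """
    dim = len(feats[0])
    q_repr = []
    for q in queries:
        # Indices of the k support points closest to this query position.
        nearest = sorted(range(len(support)),
                         key=lambda i: math.dist(q, support[i]))[:k]
        weights = [1.0 / (math.dist(q, support[i]) + eps) for i in nearest]
        total = sum(weights)
        q_repr.append([
            sum(w * feats[i][d] for w, i in zip(weights, nearest)) / total
            for d in range(dim)
        ])
    return q_repr


def segmentation_head(q_repr: Sequence[Feature]) -> List[int]:
    """Toy head: one label per query (1 if above the centroid, else 0)."""
    return [1 if f[2] > 0 else 0 for f in q_repr]


def classification_head(q_repr: Sequence[Feature]) -> int:
    """Toy head: one label for the whole cloud, from a max-pooled channel."""
    return 1 if max(f[3] for f in q_repr) > 1.0 else 0


def run_pipeline(points: Sequence[Point], queries: Sequence[Point],
                 head: Callable[[List[Feature]], object]) -> object:
    """Embedding -> querying -> task head; the embedding is head-agnostic."""
    support, feats = embed(points)
    return head(query(support, feats, queries))


cloud = [(0.0, 0.0, 0.0), (0.0, 0.0, 2.0), (1.0, 0.0, 0.0), (1.0, 0.0, 2.0)]
seg_labels = run_pipeline(cloud, cloud, segmentation_head)
cls_label = run_pipeline(cloud, [(0.5, 0.0, 1.0)], classification_head)
```

In this sketch the same `embed` output serves both heads, and only the query positions change: every input point for segmentation, a single position for classification. That separation is the point being illustrated; the actual feature extractors and Q-Net are considerably more involved.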

CVPR 2022

ssd

ScanNet

ModelNet

SUN RGB-D

S3DIS
