A Qualitative Evaluation of Language Models on Automatic Question-Answering for COVID-19

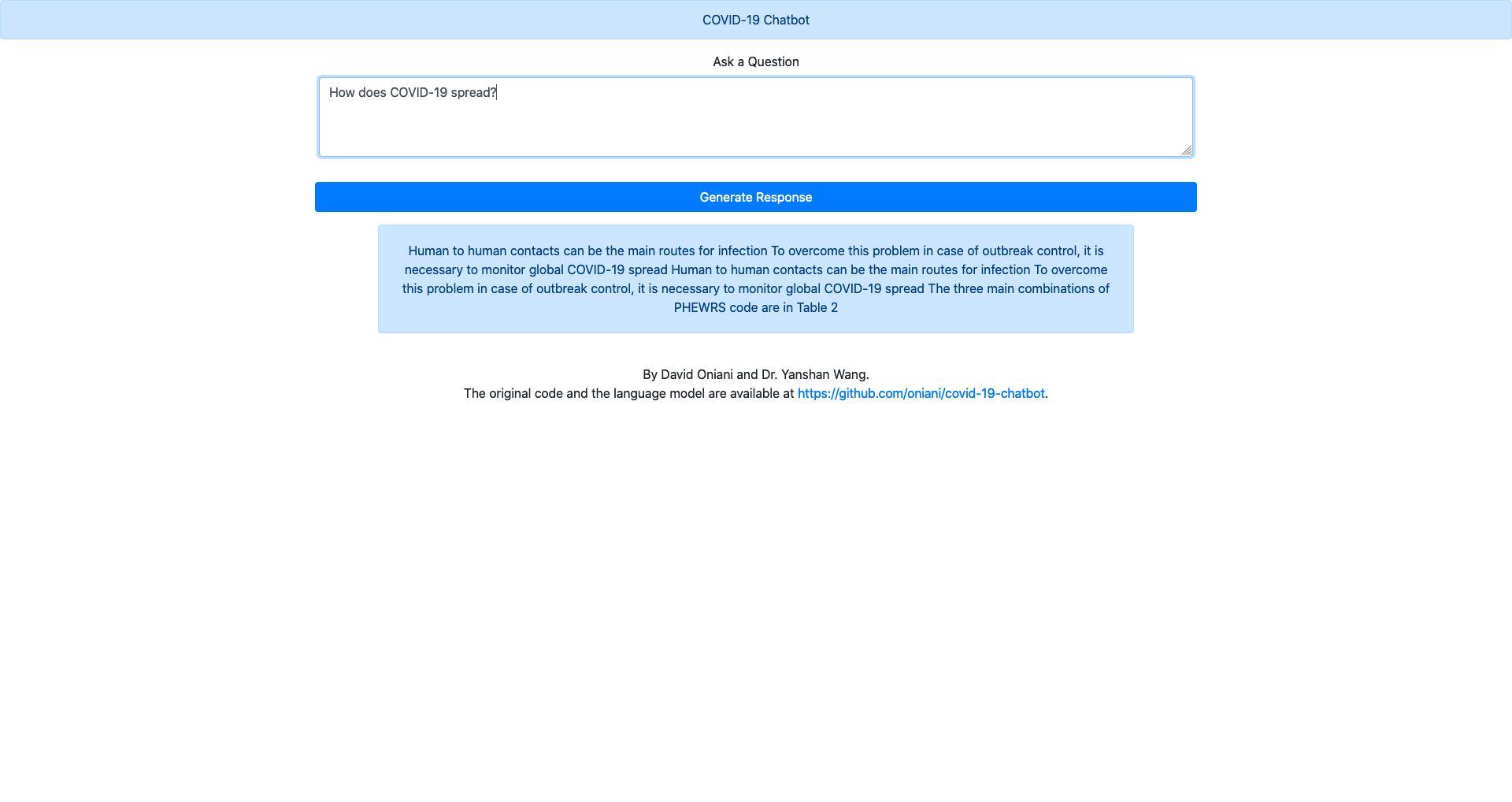

COVID-19 has resulted in an ongoing pandemic that, as of 12 June 2020, had caused more than 7.4 million cases and over 418,000 deaths. The highly dynamic and rapidly evolving situation has made it difficult to access accurate, on-demand information about the disease. Online communities, forums, and social media offer venues where people can search for relevant questions and answers, or post questions and seek answers from other members. However, such sites contain only a limited number of relevant questions and responses to search, and posted questions are rarely answered immediately. Advances in natural language processing, particularly in language models, have made it possible to design chatbots that automatically answer consumer questions. Such models, however, are rarely applied and evaluated in the healthcare domain, where information needs must be met with accurate and up-to-date healthcare data. In this paper, we apply a language model to automatically answer questions related to COVID-19 and qualitatively evaluate the generated responses. We used the GPT-2 language model and applied transfer learning to retrain it on the COVID-19 Open Research Dataset (CORD-19) corpus. To improve the quality of the generated responses, we applied four different approaches, namely tf-idf, BERT, BioBERT, and the Universal Sentence Encoder (USE), to filter the responses and retain only the relevant sentences. For the performance evaluation, we asked two medical experts to rate the responses. We found that BERT and BioBERT, on average, outperform both tf-idf and USE in relevance-based sentence filtering tasks. Additionally, based on the chatbot, we created a user-friendly interactive web application to be hosted online.
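
The relevance-based sentence filtering step can be illustrated with a minimal sketch of the tf-idf variant. This is not the authors' implementation: the tokenizer, the smoothed idf weighting, the cosine-similarity scoring, the `threshold` value, and every function name below are assumptions chosen for illustration, since the abstract does not specify them.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_vectors(docs):
    """Return one sparse tf-idf vector (term -> weight dict) per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        # Smoothed idf, so a term shared by every document keeps a non-zero weight.
        vectors.append({
            term: count * (math.log((1 + n_docs) / (1 + df[term])) + 1.0)
            for term, count in tf.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def filter_sentences(question, sentences, threshold=0.2):
    """Keep sentences whose similarity to the question is at least `threshold`.

    `threshold` is a hypothetical cut-off, not a value taken from the paper.
    """
    vectors = tfidf_vectors([question] + sentences)
    q_vec, s_vecs = vectors[0], vectors[1:]
    return [s for s, v in zip(sentences, s_vecs) if cosine(q_vec, v) >= threshold]


if __name__ == "__main__":
    question = "What are the symptoms of COVID-19?"
    generated = [
        "Common symptoms of COVID-19 include fever and cough.",
        "Stock markets closed higher on Friday.",
    ]
    for sentence in filter_sentences(question, generated):
        print(sentence)
```

Under the same assumptions, the embedding-based variants (BERT, BioBERT, USE) would replace `tfidf_vectors` with sentence embeddings from the respective model while keeping the similarity-and-threshold step unchanged.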
