An End-to-End Joint Learning Scheme of Image Compression and Quality Enhancement with Improved Entropy Minimization

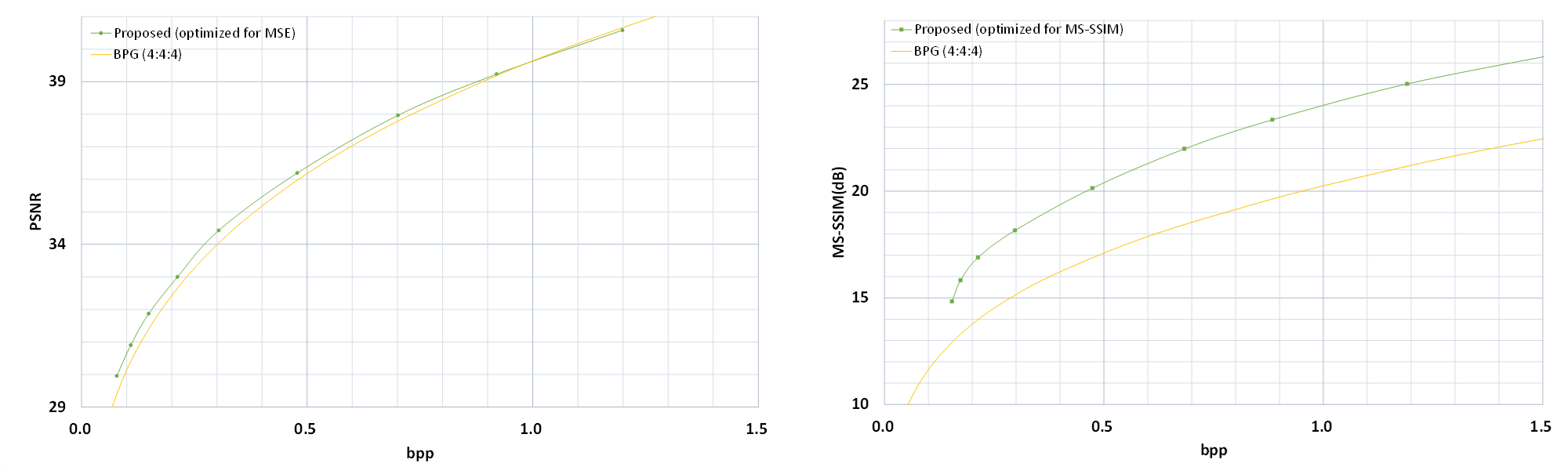

Recently, learned image compression methods have been actively studied. Among them, entropy-minimization based approaches have achieved superior results compared to conventional image codecs such as BPG and JPEG2000. However, quality enhancement and rate minimization conflict with each other in the image compression process: maintaining high image quality entails less compression, and vice versa. Nevertheless, coding efficiency can be improved by jointly training a separate quality enhancement network in conjunction with image compression. In this paper, we propose a novel joint learning scheme of image compression and quality enhancement, called JointIQ-Net, together with an improved entropy model, thus achieving significantly improved coding efficiency over previous methods. The proposed JointIQ-Net combines an image compression sub-network and a quality enhancement sub-network in a cascade, and the two are trained jointly end to end within the JointIQ-Net. The JointIQ-Net also benefits from improved entropy minimization that newly adopts a Gaussian Mixture Model (GMM) and further exploits global context to estimate the probabilities of latent representations. To show the effectiveness of the proposed JointIQ-Net, we performed extensive experiments, which show that the JointIQ-Net achieves a remarkable improvement in coding efficiency in terms of both PSNR and MS-SSIM, compared to previous learned image compression methods and conventional codecs such as VVC Intra (VTM 7.1), BPG, and JPEG2000. To the best of our knowledge, this is the first end-to-end optimized image compression method that outperforms VTM 7.1 (Intra), the latest reference software of the VVC standard, in terms of both PSNR and MS-SSIM.
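To make the role of a GMM entropy model more concrete, the following is a minimal sketch, not the authors' implementation. It assumes the common convention in learned image compression that a latent value is rounded to an integer and its probability is the mixture mass over a unit-width bin, and that training minimizes a rate term plus a weighted distortion term. The abstract does not specify either detail, and the function names, the `lam` trade-off weight, and the mixture parameters are hypothetical.

```python
import math


def gaussian_cdf(x, mu, sigma):
    """Cumulative distribution function of a Gaussian N(mu, sigma^2)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def gmm_symbol_probability(y, weights, means, scales):
    """Probability of an integer-quantized latent y under a Gaussian mixture.

    Assumption: each component contributes its mass on [y - 0.5, y + 0.5].
    """
    p = 0.0
    for w, mu, s in zip(weights, means, scales):
        p += w * (gaussian_cdf(y + 0.5, mu, s) - gaussian_cdf(y - 0.5, mu, s))
    # Clamp to avoid log(0) for symbols far from every component.
    return max(p, 1e-9)


def estimated_bits(latents, weights, means, scales):
    """Estimated code length in bits: sum of -log2 p(y) over all latents."""
    return sum(
        -math.log2(gmm_symbol_probability(y, weights, means, scales))
        for y in latents
    )


def rate_distortion_loss(bits, num_pixels, mse, lam):
    """Assumed joint objective: bits per pixel plus lam times distortion."""
    return bits / num_pixels + lam * mse
```

In a real model the mixture weights, means, and scales would be predicted per latent element by the entropy model (for example from context), rather than shared across all latents as in this simplified sketch.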
