3D-CODED: 3D Correspondences by Deep Deformation

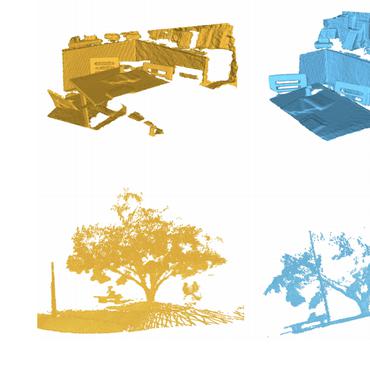

We present a new deep learning approach for matching deformable shapes by introducing *Shape Deformation Networks*, which jointly encode 3D shapes and correspondences. This is achieved by factoring the surface representation into (i) a template that parameterizes the surface, and (ii) a learnt global feature vector that parameterizes the transformation of the template into the input surface. By predicting this feature for a new shape, we implicitly predict correspondences between this shape and the template. We show that these correspondences can be improved by an additional step that refines the shape feature by minimizing the Chamfer distance between the input and the transformed template. We demonstrate that our simple approach improves on state-of-the-art results on the difficult FAUST-inter challenge, with an average correspondence error of 2.88 cm. We show, on the TOSCA dataset, that our method is robust to many types of perturbations and generalizes to non-human shapes. This robustness allows it to perform well on real, unclean meshes from the SCAPE dataset.
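Two ingredients from the abstract can be illustrated with a small pure-Python sketch: reading correspondences off the deformed template, and scoring a reconstruction with the Chamfer distance. This is not the authors' code. The deformed templates are assumed to be given (standing in for the network's output), nearest neighbours are found by brute force, and the Chamfer distance uses one common convention (the sum of the two mean squared nearest-neighbour distances), which may differ from the paper's exact normalization. The helper names `chamfer_distance`, `match_via_template` and `mean_correspondence_error` are hypothetical. Shape-to-shape matching assumes that template vertex k, deformed towards each shape, denotes the same point on both; the error metric assumes the reported figure is a mean Euclidean distance.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_index(p: Point, cloud: Sequence[Point]) -> int:
    """Index of the point in `cloud` closest to `p` (brute-force search)."""
    return min(range(len(cloud)), key=lambda i: sq_dist(p, cloud[i]))


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer distance (one common convention, assumed here):
    mean squared nearest-neighbour distance from a to b plus from b to a."""
    a_to_b = sum(sq_dist(p, b[nearest_index(p, b)]) for p in a) / len(a)
    b_to_a = sum(sq_dist(q, a[nearest_index(q, a)]) for q in b) / len(b)
    return a_to_b + b_to_a


def match_via_template(
    source: Sequence[Point],
    deformed_for_source: Sequence[Point],
    deformed_for_target: Sequence[Point],
    target: Sequence[Point],
) -> List[int]:
    """For each source point, return the index of its matched target point.

    Assumption: deformed_for_source[k] and deformed_for_target[k] are the
    same template vertex k deformed towards each shape, so template indices
    serve as the shared correspondence between the two shapes.
    """
    matches = []
    for p in source:
        k = nearest_index(p, deformed_for_source)  # source point -> template vertex k
        q = deformed_for_target[k]                  # vertex k on the target's deformation
        matches.append(nearest_index(q, target))   # snap to the closest target point
    return matches


def mean_correspondence_error(
    predicted: Sequence[Point], ground_truth: Sequence[Point]
) -> float:
    """Average Euclidean distance between predicted and true matches."""
    total = sum(math.sqrt(sq_dist(p, g)) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Toy example: a 4-vertex "template" and two shapes that are pure
# translations of it. We pretend the deformation was perfect, so each
# deformed template coincides with its shape.
template = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
source = [(x + 5.0, y, z) for x, y, z in template]
target = [(x, y + 5.0, z) for x, y, z in template]

matches = match_via_template(source, source, target, target)
print(matches)                              # [0, 1, 2, 3]
print(chamfer_distance(template, source))   # 41.5
predicted = [target[m] for m in matches]
print(mean_correspondence_error(predicted, target))  # 0.0
```

In the full method, the refinement step would adjust the global feature so that the Chamfer distance between the input and the deformed template decreases (for instance by gradient descent through the decoder), which moves the deformed template and therefore the matches; that optimization is omitted from this sketch.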

Results from the Paper

Ranked #9 on 3D Dense Shape Correspondence on SHREC'19 (using extra training data)

FAUST

SURREAL

SHREC'19