Exploring the Capacity of an Orderless Box Discretization Network for Multi-orientation Scene Text Detection

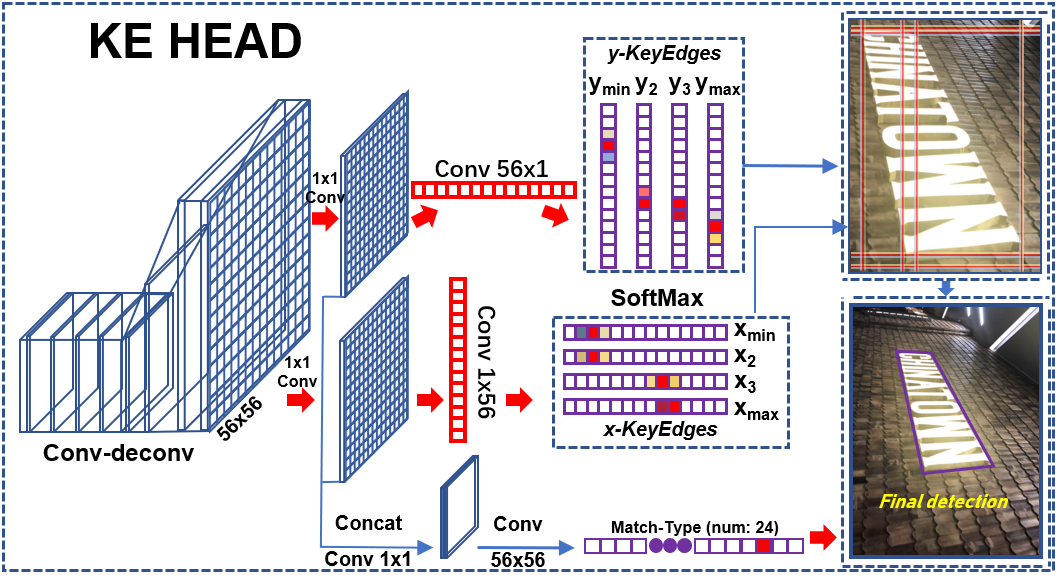

Multi-orientation scene text detection has recently gained significant research attention. Previous methods directly predict words or text lines, typically as quadrilaterals. However, many of these methods neglect the importance of consistent labeling, which is needed for a stable training process, especially when training on a large amount of data. We address this problem with a new method, Orderless Box Discretization (OBD), which first discretizes the quadrilateral box into several key edges containing all potential horizontal and vertical positions. To decode accurate vertex positions, a simple yet effective matching procedure then reconstructs the quadrilateral bounding boxes. Our method resolves the labeling ambiguity, which otherwise has a significant impact on the learning process. Extensive ablation studies quantitatively validate the effectiveness of the proposed method. More importantly, based on OBD, we provide a detailed analysis of the impact of a collection of refinements, which may inspire others to build state-of-the-art text detectors. Combining OBD with these refinements, we achieve state-of-the-art performance on various benchmarks, including ICDAR 2015 and MLT. Our method also won first place in the text detection task at the recent ICDAR 2019 Robust Reading Challenge for Reading Chinese Text on Signboards, further demonstrating its performance. The code is available at https://git.io/TextDet.
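As a rough illustration of the idea, the sketch below encodes a quadrilateral in a way that does not depend on the order in which its vertices are labeled, and then decodes it again. It is a minimal sketch under stated assumptions, not the released implementation: the function names `encode_orderless` and `decode_orderless` are hypothetical, the "key edges" are assumed to be the independently sorted x and y coordinates of the four vertices, and the matching step is assumed to be a permutation that pairs each sorted x with one sorted y.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def encode_orderless(quad: Sequence[Point]) -> Tuple[List[float], List[float], Tuple[int, ...]]:
    """Encode four vertices (given in any order) as sorted xs, sorted ys, and a pairing.

    Assumption: the sorted coordinate lists stand in for the "key edges", and the
    returned permutation stands in for the matching information.
    """
    if len(quad) != 4:
        raise ValueError("expected exactly four vertices")

    # Rank vertices by x (ties broken by y) and by y (ties broken by x).
    order_x = sorted(range(4), key=lambda i: (quad[i][0], quad[i][1]))
    order_y = sorted(range(4), key=lambda i: (quad[i][1], quad[i][0]))

    xs = [quad[i][0] for i in order_x]  # all horizontal positions, ascending
    ys = [quad[i][1] for i in order_y]  # all vertical positions, ascending

    # pairing[k] is the index into ys that belongs with xs[k].
    rank_y = {vertex: rank for rank, vertex in enumerate(order_y)}
    pairing = tuple(rank_y[vertex] for vertex in order_x)
    return xs, ys, pairing


def decode_orderless(xs: Sequence[float], ys: Sequence[float], pairing: Sequence[int]) -> List[Point]:
    """Rebuild the four vertices by pairing each sorted x with its matched y."""
    return [(xs[k], ys[pairing[k]]) for k in range(4)]


if __name__ == "__main__":
    quad = [(10, 5), (40, 12), (35, 30), (5, 22)]
    relabeled = quad[2:] + quad[:2]  # same box, different starting vertex

    # Both labelings produce the same encoding, so the target is unambiguous.
    print(encode_orderless(quad) == encode_orderless(relabeled))
    print(sorted(decode_orderless(*encode_orderless(quad))) == sorted(quad))
```

The point of the sketch is that the regression targets (`xs`, `ys`) are identical for every labeling of the same box, so only the pairing has to be recovered to reconstruct the vertices. The decoded vertices come back as an unordered set; restoring a drawing order (for example clockwise) would be a separate step.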


ICDAR 2017
