Learning Representations by Maximizing Mutual Information Across Views

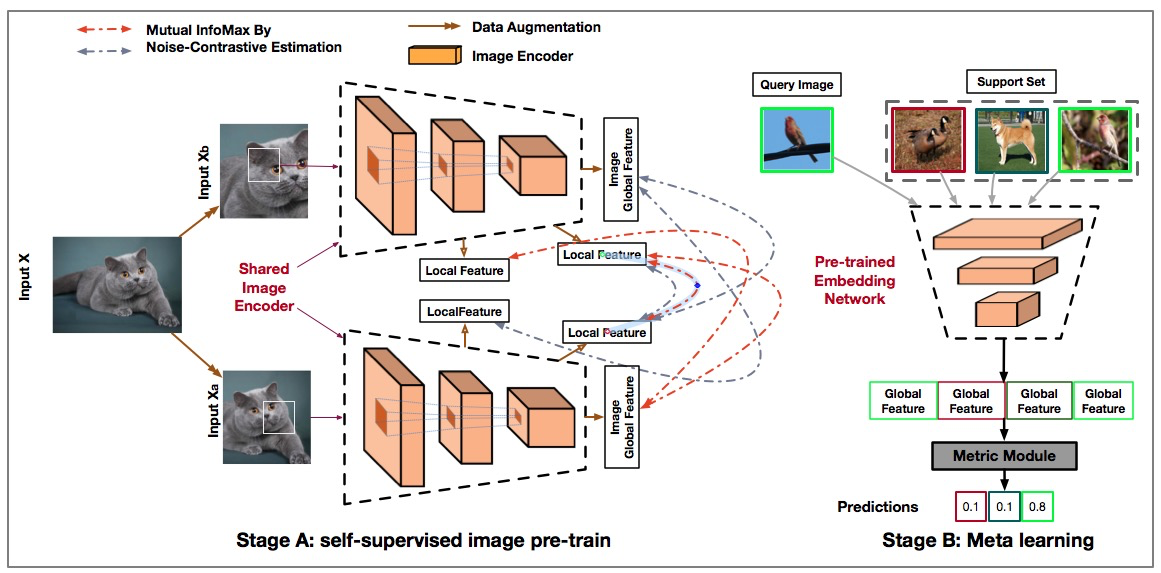

We propose an approach to self-supervised representation learning based on maximizing mutual information between features extracted from multiple views of a shared context. For example, one could produce multiple views of a local spatio-temporal context by observing it from different locations (e.g., camera positions within a scene), and via different modalities (e.g., tactile, auditory, or visual). Or, an ImageNet image could provide a context from which one produces multiple views by repeatedly applying data augmentation. Maximizing mutual information between features extracted from these views requires capturing information about high-level factors whose influence spans multiple views -- e.g., presence of certain objects or occurrence of certain events. Following our proposed approach, we develop a model which learns image representations that significantly outperform prior methods on the tasks we consider. Most notably, using self-supervised learning, our model learns representations which achieve 68.1% accuracy on ImageNet using standard linear evaluation. This beats prior results by over 12% and concurrent results by 7%. When we extend our model to use mixture-based representations, segmentation behaviour emerges as a natural side-effect. Our code is available online: https://github.com/Philip-Bachman/amdim-public.

PDF Abstract NeurIPS 2019 PDF NeurIPS 2019 Abstract

ImageNet

ImageNet

STL-10

STL-10

Places205

Places205