Transformers

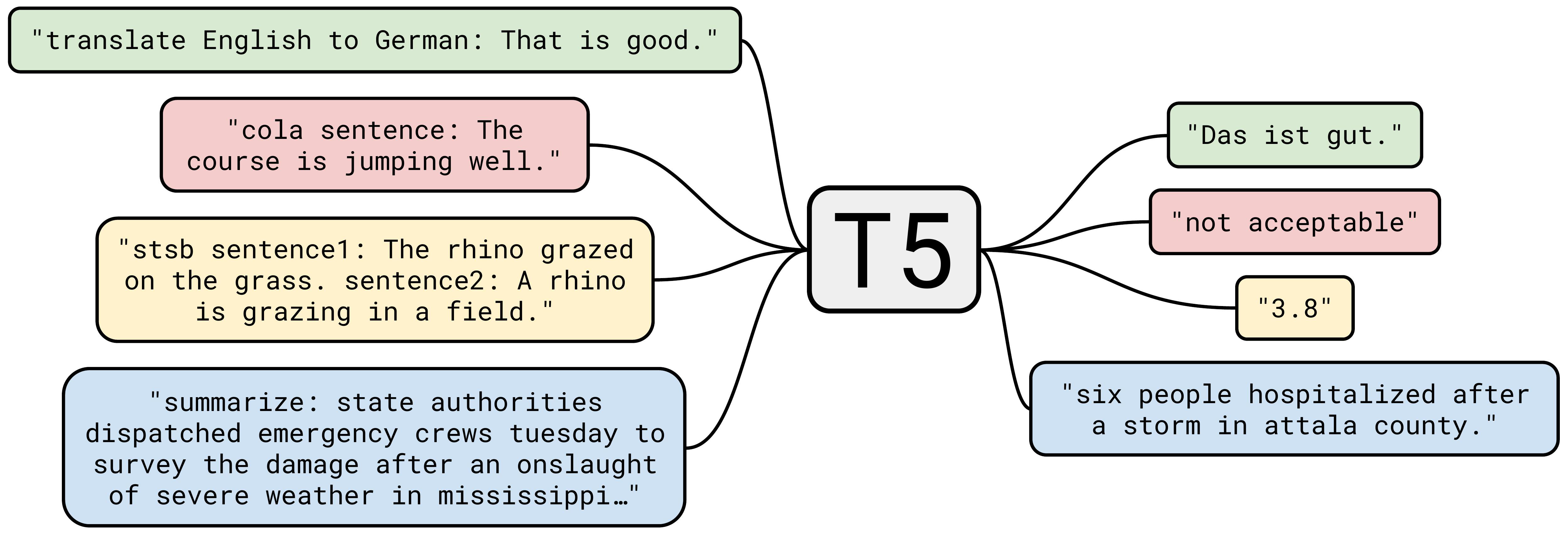

T5

Introduced by Raffel et al. in "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer".

T5, or Text-to-Text Transfer Transformer, is a Transformer-based architecture that uses a text-to-text approach. Every task, including translation, question answering, and classification, is cast as feeding the model text as input and training it to generate some target text. This allows the same model, loss function, hyperparameters, etc. to be used across a diverse set of tasks. The changes compared to BERT include:

- adding a causal decoder to the bidirectional architecture.

- replacing the fill-in-the-blank cloze task with a mix of alternative pre-training tasks.
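The text-to-text framing can be sketched in a few lines of Python. This is a minimal illustration, not the authors' preprocessing code, and it rests on several assumptions:

- The function names `to_text_to_text` and `span_corrupt` are hypothetical.
- The task prefixes are illustrative, modeled on the style used in the T5 paper.
- The sentinel strings `<extra_id_0>`, `<extra_id_1>`, ... follow the naming used by common T5 tokenizers.

`span_corrupt` shows a span-corruption objective of the kind explored in the T5 paper as an alternative to BERT-style masking. Each dropped span is replaced by a single sentinel, and the target lists each sentinel followed by the tokens it hid. The spans are passed in explicitly so the output is deterministic. In real pre-training they are sampled at random; the paper's default corrupts roughly 15% of tokens.

```python
def to_text_to_text(task: str, example: dict) -> tuple[str, str]:
    """Cast a labeled example into an (input text, target text) pair.

    Hypothetical helper; the task prefixes are illustrative.
    """
    if task == "translate_en_de":
        return f"translate English to German: {example['en']}", example["de"]
    if task == "summarize":
        return f"summarize: {example['document']}", example["summary"]
    if task == "cola":
        # Classification: the label is generated as a word rather than a class index.
        label = "acceptable" if example["label"] == 1 else "unacceptable"
        return f"cola sentence: {example['sentence']}", label
    raise ValueError(f"unknown task: {task}")


def span_corrupt(tokens: list[str], spans: list[tuple[int, int]]) -> tuple[str, str]:
    """Span corruption: replace each [start, end) span with one sentinel.

    Returns (input text, target text). `spans` must be sorted, non-overlapping
    and non-empty; real pre-training would sample them at random.
    """
    inputs: list[str] = []
    targets: list[str] = []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        if start < cursor or end <= start:
            raise ValueError("spans must be sorted, non-overlapping and non-empty")
        sentinel = f"<extra_id_{i}>"  # sentinel naming is an assumption
        inputs.extend(tokens[cursor:start])  # keep the uncorrupted tokens
        inputs.append(sentinel)              # one sentinel stands in for the whole span
        targets.append(sentinel)             # target: the sentinel, then the hidden tokens
        targets.extend(tokens[start:end])
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # final sentinel terminates the target
    return " ".join(inputs), " ".join(targets)


if __name__ == "__main__":
    print(to_text_to_text("translate_en_de", {"en": "That is good.", "de": "Das ist gut."}))
    # → ('translate English to German: That is good.', 'Das ist gut.')
    tokens = "Thank you for inviting me to your party last week .".split()
    print(span_corrupt(tokens, [(2, 4), (8, 9)]))
    # → ('Thank you <extra_id_0> me to your party <extra_id_1> week .',
    #    '<extra_id_0> for inviting <extra_id_1> last <extra_id_2>')
```

Both functions return plain string pairs. The point is that the same sequence-to-sequence model and loss can consume downstream tasks and pre-training examples alike.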

Tasks

| Task | Papers | Share of papers |
|---|---|---|
| Language Modelling | 97 | 9.68% |
| Question Answering | 65 | 6.49% |
| Text Generation | 47 | 4.69% |
| Sentence | 44 | 4.39% |
| Translation | 32 | 3.19% |
| Retrieval | 30 | 2.99% |
| Machine Translation | 26 | 2.59% |
| Natural Language Understanding | 20 | 2.00% |
| Semantic Parsing | 19 | 1.90% |

Components

- Adafactor
- Attention Dropout
- Dense Connections
- Dropout
- GELU
- GLU
- Inverse Square Root Schedule
- Layer Normalization
- Multi-Head Attention
- Residual Connection
- Scaled Dot-Product Attention
- SentencePiece
- Softmax
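To make the Scaled Dot-Product Attention and Softmax components concrete, here is a textbook sketch of single-head attention, softmax(QK^T / sqrt(d_k)) V, in plain Python. It includes an optional causal mask of the kind a decoder's self-attention uses, so that position i only sees positions j <= i. This is a generic illustration under stated assumptions, not T5's implementation. Real implementations batch the computation across multiple heads, and T5 additionally adds relative position biases to the attention scores. The function names here are hypothetical.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    q: list[list[float]],
    k: list[list[float]],
    v: list[list[float]],
    causal: bool = False,
) -> list[list[float]]:
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    q is m x d_k, k is n x d_k, v is n x d_v. Causal masking assumes that
    q and k index the same positions (self-attention).
    """
    d_k = len(k[0])
    out = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        if causal:
            # Mask future positions; exp(-inf) is 0, so they receive zero weight.
            scores = [s if j <= i else -math.inf for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Output row i is the attention-weighted average of the value rows.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


if __name__ == "__main__":
    q = k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    print(scaled_dot_product_attention(q, k, v, causal=True)[0])  # → [1.0, 2.0]
```

With `causal=False` every position attends to the whole sequence, as in a bidirectional encoder. With `causal=True` the first position can only attend to itself, which is why its output equals the first value row.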