Interpretability


Symbolic Deep Learning

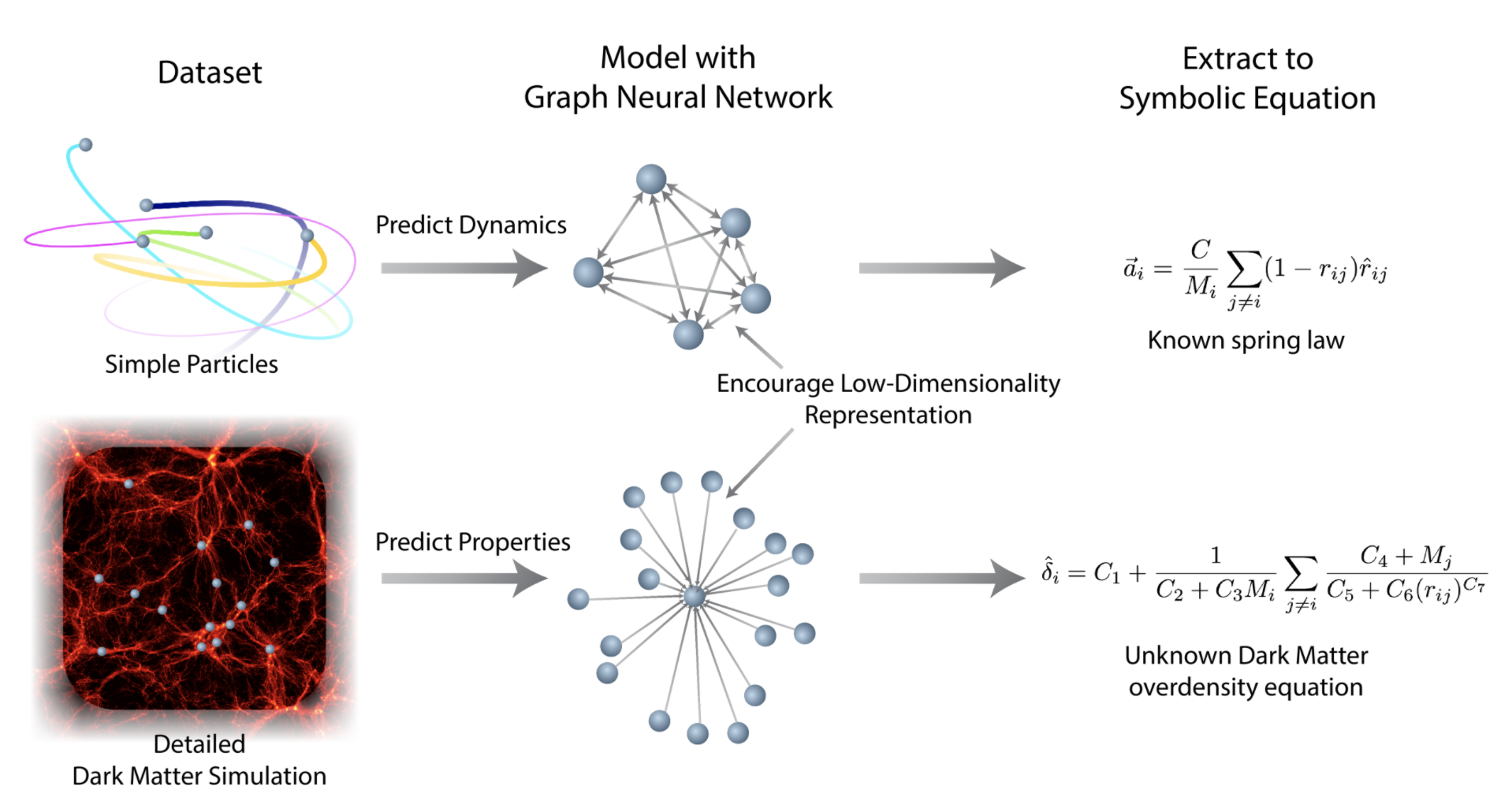

Introduced by Cranmer et al. in Discovering Symbolic Models from Deep Learning with Inductive Biases.

This is a general approach for converting a neural network into an analytic equation. The technique works as follows:

- Encourage sparse latent representations.

- Apply symbolic regression to approximate the transformations between the input, latent, and output layers.

- Compose the symbolic expressions into a single equation.
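
The three steps can be illustrated with a minimal, plain-Python sketch. It assumes a network has already been trained with an L1 penalty on its latent layer; the `encoder` and `decoder` functions are hypothetical stand-ins for that trained network, not code from the paper. The symbolic regression step swaps in a brute-force scan over a tiny hand-picked expression library in place of a full symbolic regression tool, and keeping only the single highest-variance latent dimension is a simplifying assumption.

```python
import math
import random

# Step 1 (training objective, shown but not run here): a supervised loss plus an
# L1 penalty on latent activations pushes most latent dimensions toward zero.
def sparse_loss(pred, target, latents, lam=0.01):
    return (pred - target) ** 2 + lam * sum(abs(z) for z in latents)

# Hypothetical stand-in for a network already trained with sparse_loss:
# a 4-dimensional latent layer where only dimension 1 carries real signal.
def encoder(x):
    x0, x1 = x
    return [1e-3 * x0, 2.0 * x0 * x1, 0.0, -1e-3 * x1]

def decoder(z):
    return 0.5 * z[1] ** 2

def variance(vals):
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)

def fit_scaled(features, targets):
    """Least-squares fit of targets ~= c * features; returns (c, mse)."""
    denom = sum(f * f for f in features)
    if denom == 0.0:
        return 0.0, math.inf
    c = sum(f * t for f, t in zip(features, targets)) / denom
    mse = sum((t - c * f) ** 2 for f, t in zip(features, targets)) / len(targets)
    return c, mse

def symbolic_regression(inputs, targets, library):
    """Toy brute-force search: pick the library term whose scaled fit has the lowest MSE."""
    best = None
    for name, fn in library.items():
        c, mse = fit_scaled([fn(v) for v in inputs], targets)
        if best is None or mse < best[2]:
            best = (name, c, mse)
    return best

# Hypothetical candidate terms; real tools search a much larger expression space.
INPUT_LIBRARY = {
    "x0": lambda x: x[0],
    "x1": lambda x: x[1],
    "x0*x1": lambda x: x[0] * x[1],
    "x0/x1": lambda x: x[0] / x[1],
    "x0**2": lambda x: x[0] ** 2,
    "sin(x0)": lambda x: math.sin(x[0]),
}
LATENT_LIBRARY = {
    "z": lambda z: z,
    "z**2": lambda z: z ** 2,
    "z**3": lambda z: z ** 3,
}

def extract_equation(samples):
    latents = [encoder(x) for x in samples]
    outputs = [decoder(z) for z in latents]

    # Step 2a: keep the latent dimension with the largest variance.
    k = max(range(len(latents[0])), key=lambda d: variance([z[d] for z in latents]))
    z_k = [z[k] for z in latents]

    # Step 2b: symbolic regression for input -> latent and latent -> output.
    enc_name, enc_c, _ = symbolic_regression(samples, z_k, INPUT_LIBRARY)
    dec_name, dec_c, _ = symbolic_regression(z_k, outputs, LATENT_LIBRARY)

    # Step 3: compose by substituting the input->latent expression for z.
    formula = f"{dec_c:.3g}*" + dec_name.replace("z", f"({enc_c:.3g}*{enc_name})")
    return k, formula

random.seed(0)
data = [(random.uniform(0.5, 2.0), random.uniform(0.5, 2.0)) for _ in range(200)]
print(extract_equation(data))
```

Running it prints `(1, '0.5*(2*x0*x1)**2')`: the sketch picks the informative latent, fits each layer-to-layer map separately, and substitutes one expression into the other. Fitting each transformation separately keeps every symbolic search low-dimensional, which is the motivation for working through the sparse latent layer rather than regressing the whole network at once.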

In the paper, we show that the correct known equations, including force laws and Hamiltonians, can be extracted from the neural network. We then apply our method to a non-trivial cosmology example (a detailed dark matter simulation) and discover a new analytic formula that predicts the concentration of dark matter from the mass distribution of nearby cosmic structures. The symbolic expressions extracted from the graph neural network (GNN) using our technique also generalized to out-of-distribution data better than the GNN itself. Our approach offers alternative directions for interpreting neural networks and discovering novel physical principles from the representations they learn.

Source: Discovering Symbolic Models from Deep Learning with Inductive Biases
