Attention Patterns

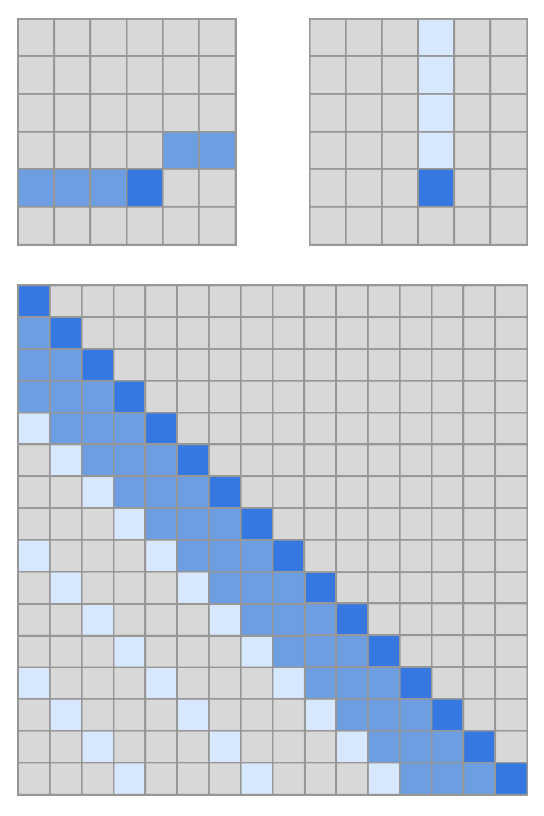

Strided Attention

Introduced by Child et al. in Generating Long Sequences with Sparse Transformers

Strided Attention is a factorized attention pattern in which one head attends to the previous $l$ locations and the other head attends to every $l$th location, where $l$ is the stride, chosen to be close to $\sqrt{n}$ for a sequence of length $n$. It was proposed as part of the Sparse Transformer architecture.

A self-attention layer maps a matrix of input embeddings $X$ to an output matrix and is parameterized by a connectivity pattern $S = \left\{S_{1}, \dots, S_{n}\right\}$, where $S_{i}$ denotes the set of indices of the input vectors to which the $i$th output vector attends. The output vector is a weighted sum of transformations of the input vectors:

$$ \text{Attend}\left(X, S\right) = \left(a\left(\mathbf{x}_{i}, S_{i}\right)\right)_{i\in\left\{1,\dots,n\right\}} $$

$$ a\left(\mathbf{x}_{i}, S_{i}\right) = \text{softmax}\left(\frac{\left(W_{q}\mathbf{x}_{i}\right)K^{T}_{S_{i}}}{\sqrt{d}}\right)V_{S_{i}} $$

$$ K_{S_{i}} = \left(W_{k}\mathbf{x}_{j}\right)_{j\in{S_{i}}} $$

$$ V_{S_{i}} = \left(W_{v}\mathbf{x}_{j}\right)_{j\in{S_{i}}} $$

Here $W_{q}$, $W_{k}$, and $W_{v}$ represent the weight matrices which transform a given $\mathbf{x}_{i}$ into a query, key, or value, and $d$ is the inner dimension of the queries and keys. The output at each position is a sum of the values weighted by the scaled dot-product similarity of the keys and queries.
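The definitions above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Sparse Transformer implementation: positions are 0-based, vectors are plain lists, every $S_{i}$ is assumed non-empty, and the helper names (`matvec`, `softmax`, `attend_one`, `attend`) are hypothetical.

```python
import math


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def softmax(scores):
    """Numerically stable softmax over a non-empty list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_one(X, i, S_i, W_q, W_k, W_v):
    """a(x_i, S_i): output for position i, attending only to indices in S_i."""
    q = matvec(W_q, X[i])                       # query for position i
    keys = [matvec(W_k, X[j]) for j in S_i]     # K_{S_i}
    values = [matvec(W_v, X[j]) for j in S_i]   # V_{S_i}
    d = len(q)                                  # inner dimension of queries and keys
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output coordinate at a time.
    return [sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))]


def attend(X, S, W_q, W_k, W_v):
    """Attend(X, S): one output vector per position, using pattern S."""
    return [attend_one(X, i, S[i], W_q, W_k, W_v) for i in range(len(X))]
```

Here `S` is a list whose `i`th entry holds the indices that position `i` may attend to, so changing the attention pattern only means changing `S`.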

Full self-attention for autoregressive models defines $S_{i} = \left\{j : j \leq i\right\}$, allowing every element to attend to all previous positions and its own position.
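As a small illustration, the full causal pattern can be built explicitly. The sketch assumes 0-based positions, and `full_causal_pattern` is a hypothetical helper name. Counting the entries shows why this pattern becomes expensive: the total number of attended pairs is $n(n+1)/2$, which grows quadratically with the sequence length.

```python
def full_causal_pattern(n):
    """S_i = {j : j <= i} for every position i in a length-n sequence."""
    return [list(range(i + 1)) for i in range(n)]


S = full_causal_pattern(4)
# Position 0 sees itself, position 1 sees {0, 1}, and so on.
total_pairs = sum(len(S_i) for S_i in S)  # equals n * (n + 1) // 2
```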

Factorized self-attention instead has $p$ separate attention heads, where the $m$th head defines a subset of the indices $A_{i}^{(m)} \subset \left\{j : j \leq i\right\}$ and lets $S_{i} = A_{i}^{(m)}$. The goal with the Sparse Transformer was to find efficient choices for the subset $A$.

Formally, for Strided Attention, $A^{(1)}_{i} = \left\{t, t + 1, \dots, i\right\}$ for $t = \max\left(0, i - l\right)$, and $A^{(2)}_{i} = \left\{j : (i - j) \bmod l = 0\right\}$. The $i$th output vector of each attention head attends to the input vectors indexed by either $A^{(1)}_{i}$ (the local head) or $A^{(2)}_{i}$ (the strided head). The figure above visualizes this pattern.
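The two index sets can be written out directly. The following is a minimal sketch, assuming 0-based positions and the causal constraint $j \leq i$ from the factorized definition; the function names are hypothetical.

```python
def strided_local(i, l):
    """A^(1)_i: position i and the l positions before it, clipped at 0."""
    t = max(0, i - l)
    return list(range(t, i + 1))


def strided_column(i, l):
    """A^(2)_i: positions j <= i whose distance from i is a multiple of l."""
    return [j for j in range(i + 1) if (i - j) % l == 0]


def strided_pattern(n, l):
    """Connectivity patterns of both heads for a length-n sequence."""
    local_head = [strided_local(i, l) for i in range(n)]
    strided_head = [strided_column(i, l) for i in range(n)]
    return local_head, strided_head


# Example: n = 16 with stride l = 4, i.e. l = sqrt(n).
local_head, strided_head = strided_pattern(16, 4)
# local_head[10]   -> [6, 7, 8, 9, 10]
# strided_head[10] -> [2, 6, 10]
```

With $l$ close to $\sqrt{n}$, each head attends to roughly $\sqrt{n}$ positions per output instead of up to $n$.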

This formulation is convenient if the data naturally has a structure that aligns with the stride, like images or some types of music. For data without a periodic structure, like text, however, the authors find that the network can fail to properly route information with the strided pattern, as spatial coordinates for an element do not necessarily correlate with the positions where the element may be most relevant in the future.

Source: Generating Long Sequences with Sparse Transformers

Tasks

| Task | Papers | Share |
|---|---|---|
| Language Modelling | 82 | 10.82% |
| Large Language Model | 49 | 6.46% |
| Question Answering | 48 | 6.33% |
| Prompt Engineering | 30 | 3.96% |
| Retrieval | 30 | 3.96% |
| Code Generation | 28 | 3.69% |
| In-Context Learning | 28 | 3.69% |
| Sentence | 23 | 3.03% |
| Benchmarking | 18 | 2.37% |
