Replay Memory

Prioritized Experience Replay

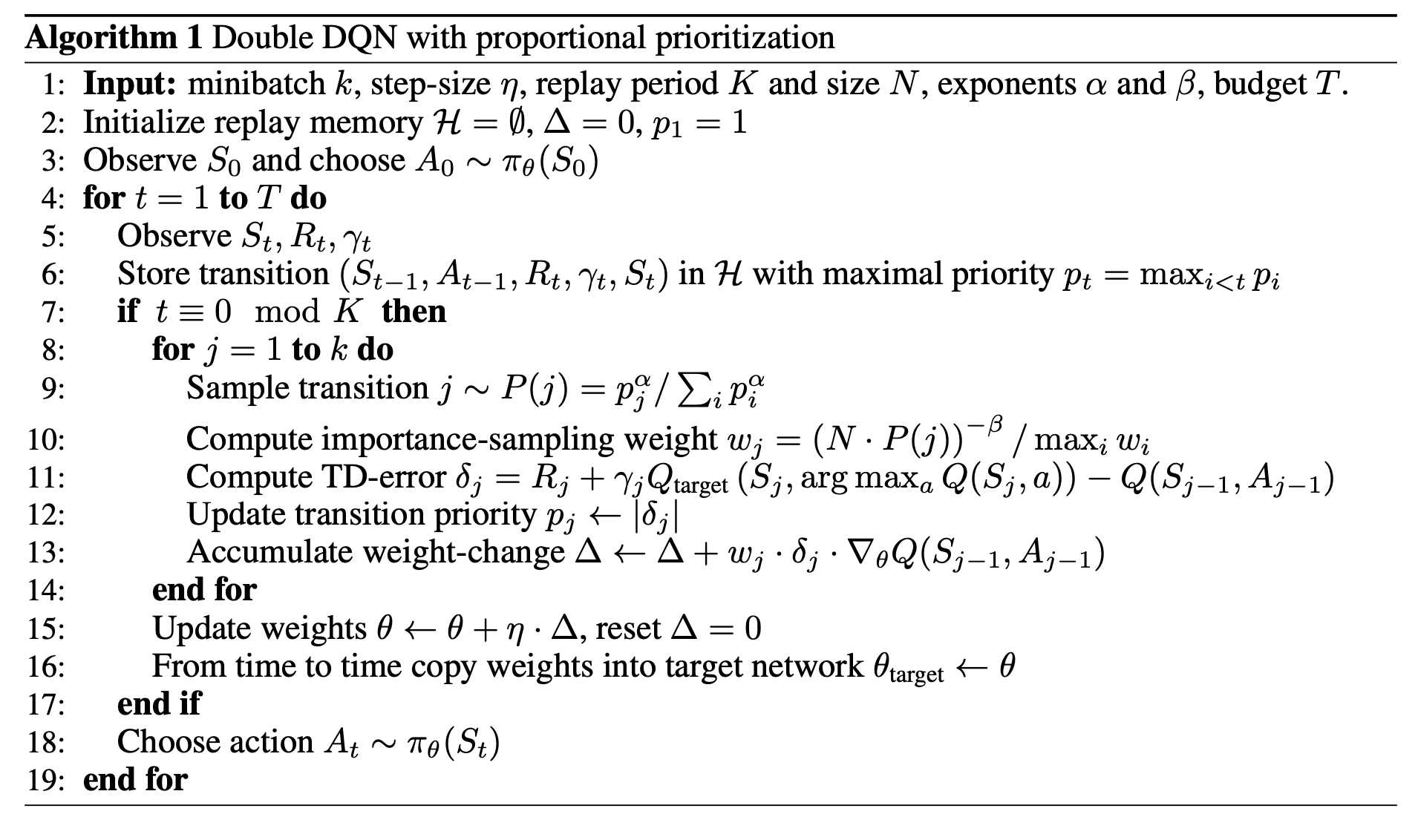

Introduced by Schaul et al. in Prioritized Experience Replay

Prioritized Experience Replay is a type of experience replay in reinforcement learning in which transitions with high expected learning progress, as measured by the magnitude of their temporal-difference (TD) error, are replayed more frequently. This prioritization can lead to a loss of diversity, which is alleviated with stochastic prioritization, and it introduces bias, which can be corrected with importance sampling.

The stochastic sampling method interpolates between pure greedy prioritization and uniform random sampling. The sampling probability is monotonic in a transition's priority, and even the lowest-priority transition has a non-zero probability of being sampled. Concretely, define the probability of sampling transition $i$ as

$$P(i) = \frac{p_i^{\alpha}}{\sum_k p_k^{\alpha}}$$

where $p_i > 0$ is the priority of transition $i$. The exponent $\alpha$ determines how much prioritization is used, with $\alpha=0$ corresponding to the uniform case.
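As a minimal sketch of the formula above, the following NumPy snippet turns a vector of priorities into sampling probabilities and draws a minibatch from them. The function name `sampling_probabilities`, the example priorities, and the batch size are illustrative assumptions, not part of the original method description.

```python
import numpy as np

def sampling_probabilities(priorities, alpha):
    """Return P(i) = p_i^alpha / sum_k p_k^alpha for strictly positive priorities."""
    priorities = np.asarray(priorities, dtype=np.float64)
    if np.any(priorities <= 0):
        raise ValueError("priorities must be strictly positive")
    scaled = priorities ** alpha
    return scaled / scaled.sum()

# Hypothetical priorities for a replay memory holding four transitions.
priorities = [4.0, 2.0, 1.0, 1.0]

uniform = sampling_probabilities(priorities, alpha=0.0)       # alpha = 0: uniform sampling
proportional = sampling_probabilities(priorities, alpha=1.0)  # alpha = 1: P(i) proportional to p_i

# Draw a minibatch of transition indices according to P(i).
rng = np.random.default_rng(0)
batch = rng.choice(len(priorities), size=2, p=proportional)
```

With $\alpha = 0$ every transition is sampled with probability $0.25$, while $\alpha = 1$ gives $(0.5, 0.25, 0.125, 0.125)$ for these example priorities.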

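Estimating an expected value with stochastic updates relies on those updates being drawn from the same distribution as the expectation. Prioritized replay introduces bias because it changes this distribution in an uncontrolled fashion, and therefore changes the solution that the estimates converge to. We can correct this bias by using importance-sampling (IS) weights: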

$$ w_{i} = \left(\frac{1}{N}\cdot\frac{1}{P\left(i\right)}\right)^{\beta} $$

which fully compensate for the non-uniform probabilities $P\left(i\right)$ if $\beta = 1$; here $N$ is the number of transitions in the replay memory. These weights can be folded into the Q-learning update by using $w_{i}\delta_{i}$ instead of $\delta_{i}$; this is weighted IS rather than ordinary IS. For stability reasons, the weights are always normalized by $1/\max_{i}w_{i}$ so that they only scale the update downwards.
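The weight computation and normalization can be sketched as follows. This is an illustration under stated assumptions: the function name `importance_weights` and the example values are hypothetical, $N$ is taken to be the number of probabilities passed in, and the maximum is taken over all of those transitions (implementations may instead normalize over the sampled minibatch).

```python
import numpy as np

def importance_weights(probs, beta):
    """Return w_i = (1 / (N * P(i)))^beta, rescaled so that max_i w_i = 1."""
    probs = np.asarray(probs, dtype=np.float64)
    n = probs.size                          # assumption: N = number of stored transitions
    weights = (1.0 / (n * probs)) ** beta
    return weights / weights.max()          # normalization only scales updates downwards

# Hypothetical sampling probabilities and TD errors for four transitions.
probs = np.array([0.5, 0.25, 0.125, 0.125])
td_errors = np.array([2.0, -1.0, 0.5, 0.5])

w = importance_weights(probs, beta=1.0)     # full correction
weighted_errors = w * td_errors             # use w_i * delta_i in place of delta_i

no_correction = importance_weights(probs, beta=0.0)  # beta = 0: all weights equal 1
```

For these values the normalized weights are $(0.25, 0.5, 1, 1)$: frequently sampled transitions are scaled down, and with $\beta = 1$ the product $w_i P(i)$ is the same for every transition.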

The two variants of prioritization are proportional, where $p_{i} = |\delta_{i}| + \epsilon$, and rank-based, where $p_{i} = \frac{1}{\text{rank}\left(i\right)}$ and $\text{rank}\left(i\right)$ is the rank of transition $i$ when the replay memory is sorted according to $|\delta_{i}|$. Here $\epsilon$ is a small positive constant that keeps transitions with zero TD error from never being revisited. The hyperparameters used were $\alpha = 0.6$, $\beta_{0} = 0.4$ for the proportional variant and $\alpha = 0.7$, $\beta_{0} = 0.5$ for the rank-based variant, where $\beta_{0}$ is the initial value of $\beta$, which is annealed towards 1 over the course of training.
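The two priority definitions can be compared on a small example. The sketch below assumes NumPy; the function names, the value of `eps`, and the TD errors are illustrative choices, and ranking is done with a full sort for clarity rather than with the more efficient data structures a large replay memory would need.

```python
import numpy as np

def proportional_priorities(td_errors, eps=1e-6):
    """p_i = |delta_i| + eps; eps is an assumed small positive constant."""
    return np.abs(np.asarray(td_errors, dtype=np.float64)) + eps

def rank_based_priorities(td_errors):
    """p_i = 1 / rank(i), where rank 1 is the transition with the largest |delta_i|."""
    abs_err = np.abs(np.asarray(td_errors, dtype=np.float64))
    order = np.argsort(-abs_err, kind="stable")     # indices from largest to smallest |delta|
    ranks = np.empty(abs_err.size, dtype=np.int64)
    ranks[order] = np.arange(1, abs_err.size + 1)   # assign ranks 1..N
    return 1.0 / ranks

# Hypothetical TD errors for four stored transitions.
td_errors = np.array([0.1, -3.0, 0.5, 0.0])

p_prop = proportional_priorities(td_errors, eps=0.01)
p_rank = rank_based_priorities(td_errors)
```

Here the proportional priorities are $(0.11, 3.01, 0.51, 0.01)$ and the rank-based priorities are $(1/3, 1, 1/2, 1/4)$. The rank-based values depend only on the ordering of the errors, so a single very large TD error does not dominate the sampling distribution the way it can in the proportional variant.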

Source: Prioritized Experience Replay

Tasks

| Task | Papers | Share |
|---|---|---|
| Reinforcement Learning (RL) | 67 | 32.68% |
| Model-based Reinforcement Learning | 18 | 8.78% |
| Atari Games | 14 | 6.83% |
| Continuous Control | 14 | 6.83% |
| OpenAI Gym | 8 | 3.90% |
| Decision Making | 8 | 3.90% |
| Multi-agent Reinforcement Learning | 6 | 2.93% |
| Board Games | 6 | 2.93% |
| Starcraft | 5 | 2.44% |
