Language Models

Neural Probabilistic Language Model

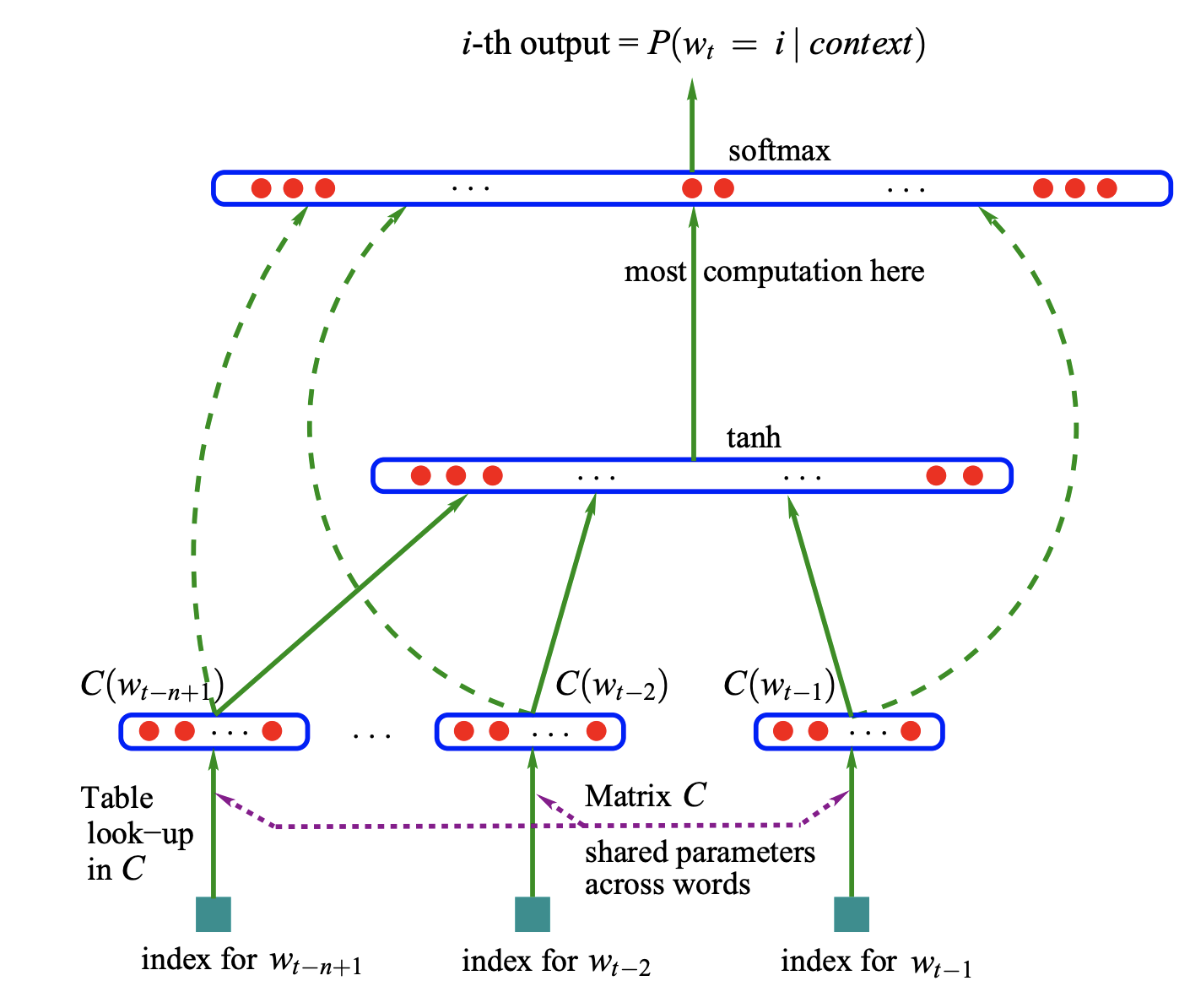

A Neural Probabilistic Language Model is an early language modelling architecture. It is a feedforward network whose input is the vector representations (i.e. word embeddings) of the previous $n$ words, each looked up in the same embedding table $C$.
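Written as an equation, the network input $x$ is the concatenation of the $n$ looked-up embeddings. This assumes each embedding has dimension $m$ (a symbol introduced here for illustration), so that $C$ is a $|V| \times m$ matrix over a vocabulary $V$ and $C(w)$ denotes the row of $C$ belonging to word $w$:

$$
x = \left[\, C(w_{t-n});\ \ldots;\ C(w_{t-2});\ C(w_{t-1}) \,\right] \in \mathbb{R}^{n m}
$$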

The word embeddings are concatenated and fed into a hidden layer, whose output in turn feeds a softmax layer that estimates the probability of the next word given the context.
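The forward pass described above can be sketched in plain Python. This is a minimal, untrained illustration under stated assumptions: the class name `NPLM`, its parameters, the random initialisation, and the $\tanh$ hidden activation (the choice made in the original paper by Bengio et al.) are illustrative, and the paper's optional direct input-to-output connections are omitted.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


class NPLM:
    """Hypothetical, untrained NPLM forward pass (training is not shown)."""

    def __init__(self, vocab_size, n, emb_dim, hidden_dim, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.n = n
        self.C = rand_matrix(vocab_size, emb_dim)      # lookup table C: one embedding row per word
        self.H = rand_matrix(hidden_dim, n * emb_dim)  # hidden-layer weights
        self.d = [0.0] * hidden_dim                    # hidden-layer bias
        self.U = rand_matrix(vocab_size, hidden_dim)   # hidden-to-output weights
        self.b = [0.0] * vocab_size                    # output bias

    def predict(self, context):
        """Return P(next word | context) for a context of n word ids."""
        if len(context) != self.n:
            raise ValueError(f"expected {self.n} context words, got {len(context)}")
        # 1. Look up each context word in C and concatenate the embeddings.
        x = [value for word_id in context for value in self.C[word_id]]
        # 2. Hidden layer: tanh(d + Hx); tanh is an assumption following the original paper.
        h = [math.tanh(d_i + z) for d_i, z in zip(self.d, matvec(self.H, x))]
        # 3. Output layer: softmax(b + Uh) gives one probability per vocabulary word.
        logits = [b_i + z for b_i, z in zip(self.b, matvec(self.U, h))]
        return softmax(logits)


if __name__ == "__main__":
    model = NPLM(vocab_size=10, n=3, emb_dim=4, hidden_dim=8)
    probs = model.predict([1, 5, 2])  # ids of the previous n = 3 words
    print(len(probs), round(sum(probs), 6))  # 10 1.0
```

In a real model the parameters $C$, $H$, $d$, $U$ and $b$ would be learned jointly by maximising the log-likelihood of the next word; that training step is left out of this sketch.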

Tasks

| Task | Papers | Share |
|---|---|---|
| Language Modelling | 5 | 31.25% |
| Machine Translation | 2 | 12.50% |
| Translation | 2 | 12.50% |
| Text Generation | 1 | 6.25% |
| Graph Embedding | 1 | 6.25% |
| Knowledge Graph Embedding | 1 | 6.25% |
| Knowledge Graph Embeddings | 1 | 6.25% |
| Type prediction | 1 | 6.25% |
| Vocal Bursts Type Prediction | 1 | 6.25% |
