Attention Modules

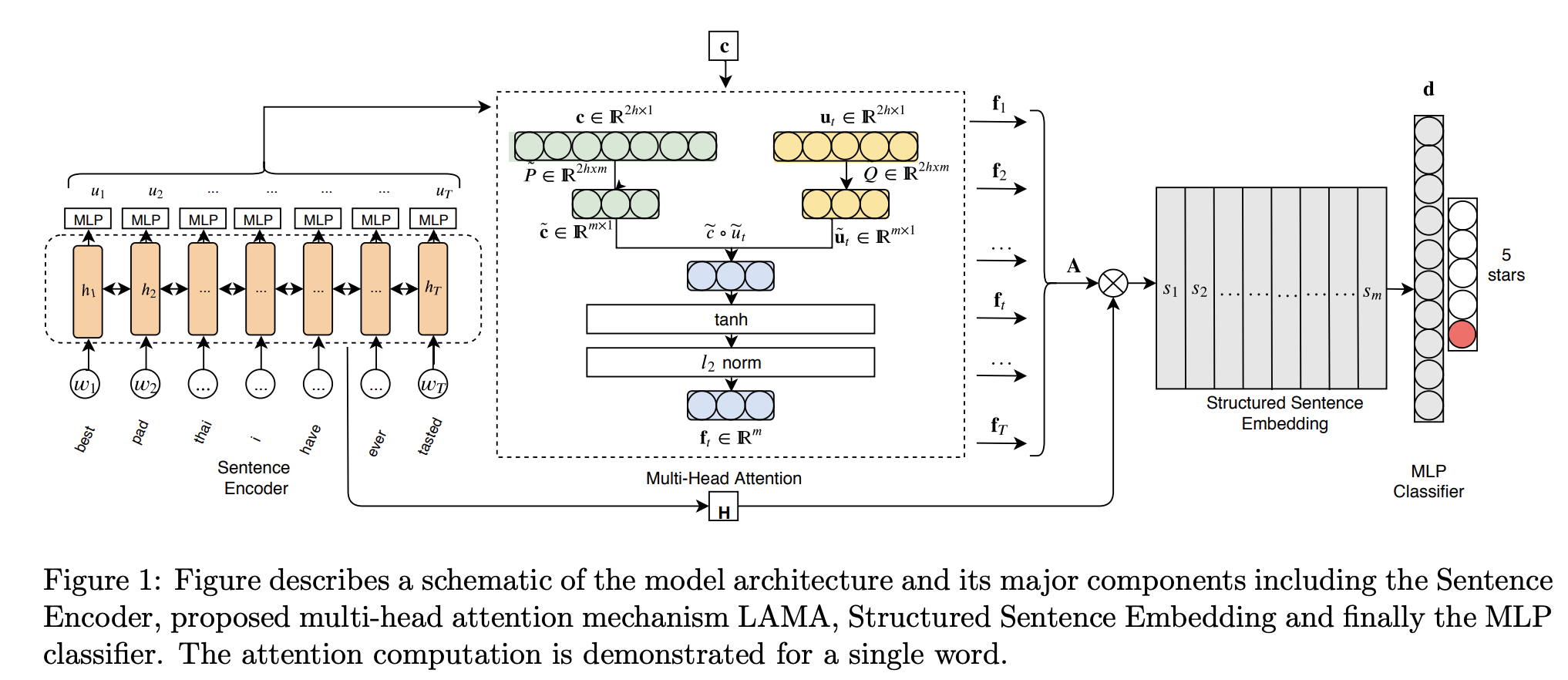

Low-Rank Factorization-based Multi-Head Attention

Introduced by Mehta et al. in Low Rank Factorization for Compact Multi-Head Self-Attention.

Low-Rank Factorization-based Multi-Head Attention Mechanism, or LAMA, is a type of attention module that uses low-rank factorization to reduce computational complexity. It uses low-rank bilinear pooling to construct a structured sentence representation that attends to multiple aspects of a sentence.
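The idea can be illustrated with a minimal NumPy sketch. It is not the paper's exact formulation. The function name `lama_attention`, the context vector `c` (taken here as the mean of the token states), the factor shapes, and the single projection `P` that produces one score per head are all assumptions made for illustration. The sketch scores tokens with the Hadamard-product form of low-rank bilinear pooling, using tanh and softmax, and then takes a softmax-weighted sum of token states per head. This yields an m × d structured sentence representation.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax: subtract the max before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def lama_attention(H, c, U, V, P):
    """Hypothetical sketch of multi-head attention scored by low-rank bilinear pooling.

    H: (n, d)    hidden states for n tokens
    c: (d_c,)    context vector (ASSUMPTION: any sentence-level vector, e.g. mean of H)
    U: (d, r)    low-rank factor applied to each token state
    V: (d_c, r)  low-rank factor applied to the context vector
    P: (r, m)    maps the rank-r joint feature to one score per head (m heads)
    Returns A: (m, n) attention weights and M: (m, d) sentence representation.
    """
    # Low-rank bilinear pooling: Hadamard product of two tanh-squashed projections.
    joint = np.tanh(H @ U) * np.tanh(c @ V)   # (n, r); c @ V broadcasts over tokens
    scores = joint @ P                        # (n, m): one score per token per head
    A = softmax(scores.T, axis=1)             # (m, n): each head's weights sum to 1
    M = A @ H                                 # (m, d): one weighted summary per head
    return A, M

# Toy usage with random weights (all sizes are illustrative assumptions).
rng = np.random.default_rng(0)
n, d, d_c, r, m = 5, 8, 8, 4, 3
H = rng.standard_normal((n, d))
c = H.mean(axis=0)
U = rng.standard_normal((d, r))
V = rng.standard_normal((d_c, r))
P = rng.standard_normal((r, m))
A, M = lama_attention(H, c, U, V, P)
print(A.shape, M.shape)  # (3, 5) (3, 8)

# Parameter count: m separate full bilinear matrices (d x d_c each) vs. the factors above.
full_params = m * d * d_c
lowrank_params = U.size + V.size + P.size
print(full_params, lowrank_params)  # 192 76
```

In this sketch, two shared rank-r factors and a small projection replace m full d × d_c bilinear matrices. That substitution is where the parameter savings come from, and the gap widens as r shrinks relative to d.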

Source: Low Rank Factorization for Compact Multi-Head Self-Attention

Tasks

| Task | Papers | Share |
|---|---|---|
| Language Modelling | 11 | 20.75% |
| Image Inpainting | 4 | 7.55% |
| In-Context Learning | 3 | 5.66% |
| Question Answering | 3 | 5.66% |
| Text Classification | 3 | 5.66% |
| Meta-Learning | 2 | 3.77% |
| Retrieval | 2 | 3.77% |
| Sentiment Analysis | 2 | 3.77% |
| Quantization | 1 | 1.89% |

Softmax

Tanh Activation