Normalization

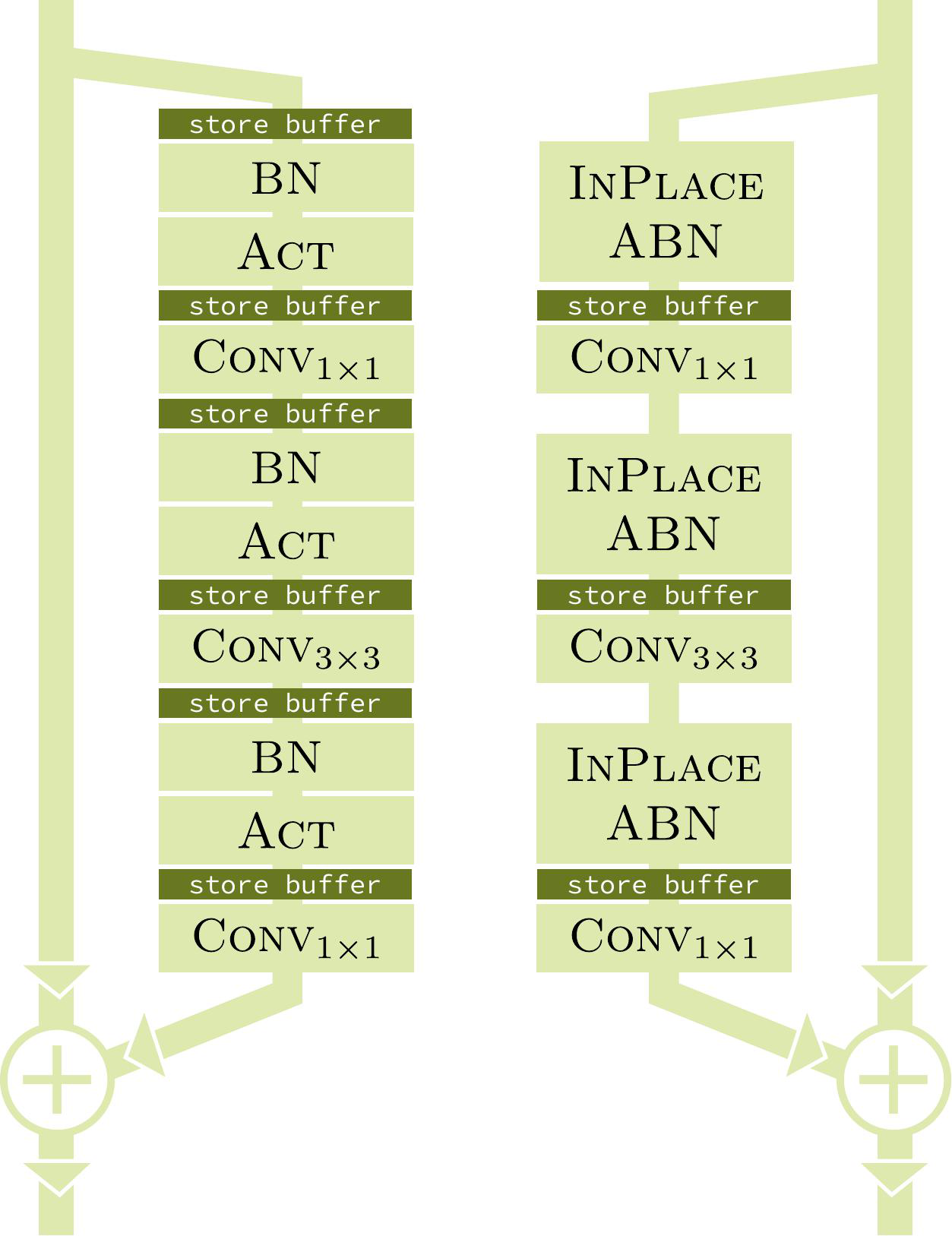

In-Place Activated Batch Normalization

Introduced by Bulò et al. in In-Place Activated BatchNorm for Memory-Optimized Training of DNNs

In-Place Activated Batch Normalization, or InPlace-ABN, replaces the conventional sequence of BatchNorm + Activation layers with a single plugin layer. This avoids invasive framework surgery and makes the method straightforward to apply in existing deep learning frameworks. It approximately halves the memory required to train modern deep learning models.
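To make the memory saving concrete, here is a minimal NumPy sketch of the general idea as described in the paper's abstract: intermediate results are dropped in the forward pass and recovered in the backward pass by inverting the stored output. It is an illustration under stated assumptions, not the authors' implementation. The function names (`fused_forward`, `recover_x_hat`, `fused_backward`), the leaky ReLU slope of 0.01, and the epsilon value are all hypothetical choices made for this example. The inversion also assumes every entry of `gamma` is nonzero.

```python
import numpy as np

SLOPE = 0.01  # assumed leaky ReLU slope; must be nonzero so the activation is invertible
EPS = 1e-5    # assumed numerical-stability constant


def fused_forward(x, gamma, beta):
    """BatchNorm over axis 0 followed by leaky ReLU; returns output and batch variance."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + EPS)
    y = gamma * x_hat + beta
    z = np.where(y >= 0, y, SLOPE * y)
    # x, x_hat and y are not kept; only z and the per-feature variance are.
    return z, var


def recover_x_hat(z, gamma, beta):
    """Invert the activation and the affine step to rebuild x_hat from z (needs gamma != 0)."""
    y = np.where(z >= 0, z, z / SLOPE)
    return (y - beta) / gamma


def fused_backward(dz, z, var, gamma, beta):
    """Gradients w.r.t. x, gamma and beta using only the stored output z and the variance."""
    x_hat = recover_x_hat(z, gamma, beta)
    dy = dz * np.where(z >= 0, 1.0, SLOPE)  # sign(z) == sign(y) because SLOPE > 0
    dgamma = (dy * x_hat).sum(axis=0)
    dbeta = dy.sum(axis=0)
    dx_hat = dy * gamma
    # Standard batch-norm input gradient, written in terms of the recovered x_hat.
    dx = (dx_hat - dx_hat.mean(axis=0)
          - x_hat * (dx_hat * x_hat).mean(axis=0)) / np.sqrt(var + EPS)
    return dx, dgamma, dbeta
```

In a conventional BatchNorm + Activation pair, both the layer input and the normalized tensor are typically kept for the backward pass. In this sketch only the single output buffer `z` is kept, which is where the saving comes from.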

Source: In-Place Activated BatchNorm for Memory-Optimized Training of DNNs


Tasks

| Task | Papers | Share |
|---|---|---|
| Image Classification | 2 | 28.57% |
| Fine-Grained Image Classification | 1 | 14.29% |
| General Classification | 1 | 14.29% |
| Multi-Label Classification | 1 | 14.29% |
| Object Detection | 1 | 14.29% |
| Semantic Segmentation | 1 | 14.29% |

Batch Normalization
