Distributed Methods

GPipe

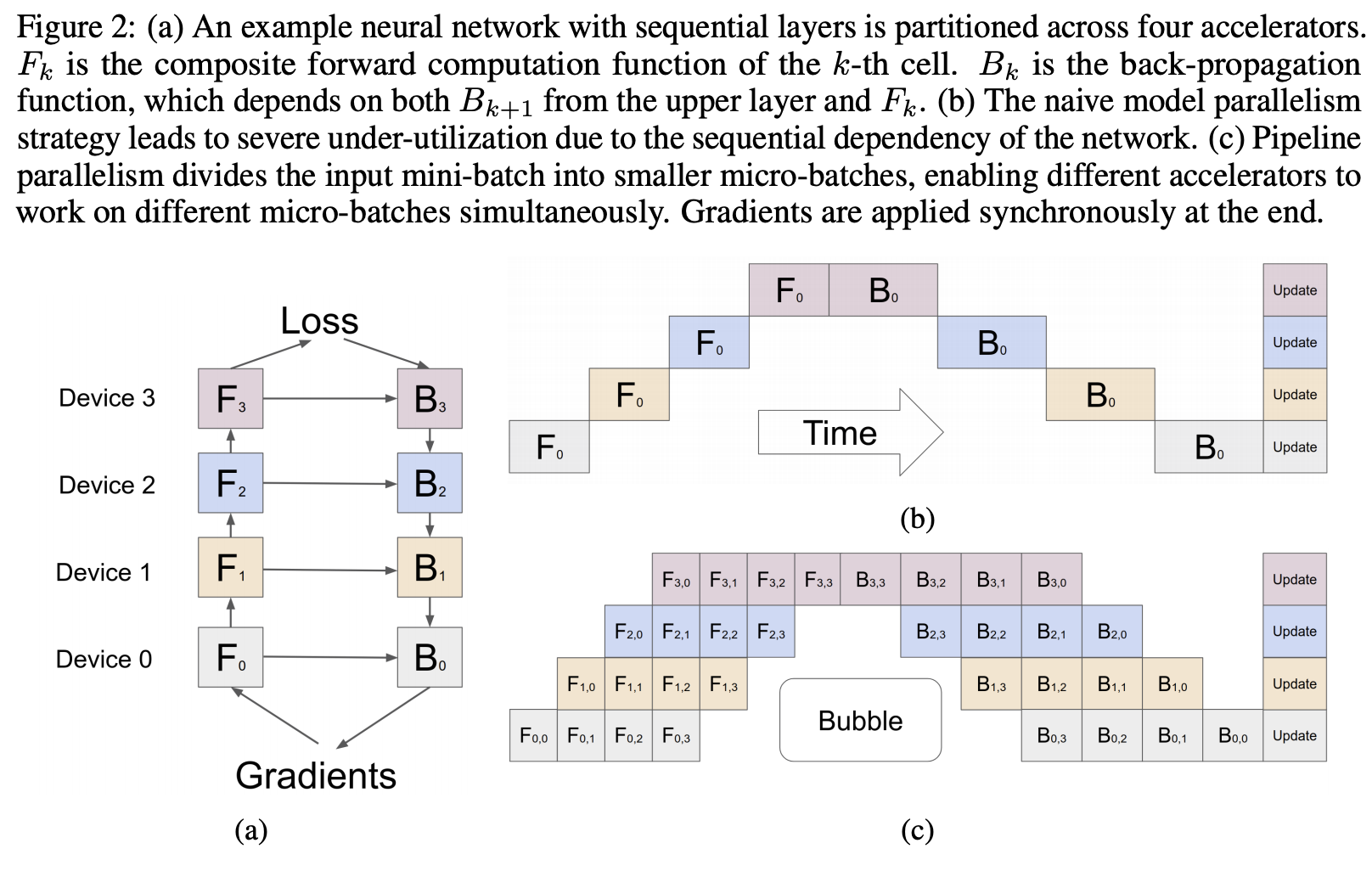

Introduced by Huang et al. in GPipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism

GPipe is a distributed model-parallel method for training neural networks. A model is specified as a sequence of layers, and consecutive groups of layers are partitioned into cells, each placed on a separate accelerator. On top of this partitioning, GPipe applies batch splitting: a mini-batch of training examples is split into smaller micro-batches, and the execution of the micro-batches is pipelined over the cells. Training uses synchronous mini-batch gradient descent: gradients are accumulated across all micro-batches in a mini-batch and applied once at the end of the mini-batch.
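The batch splitting and gradient accumulation described above can be sketched in plain Python. This is a minimal illustration, not the GPipe library's API: the function names, the idealized one-step-per-cell forward schedule, and the scalar least-squares model are all assumptions made for the example. It shows which cell works on which micro-batch at each clock step, and that accumulating micro-batch gradients before a single update gives the same gradient as processing the whole mini-batch at once.

```python
def split_into_micro_batches(mini_batch, num_micro_batches):
    """Split a mini-batch (a list of examples) into equally sized micro-batches."""
    if len(mini_batch) % num_micro_batches != 0:
        raise ValueError("mini-batch size must be divisible by num_micro_batches")
    size = len(mini_batch) // num_micro_batches
    return [mini_batch[i:i + size] for i in range(0, len(mini_batch), size)]


def forward_schedule(num_cells, num_micro_batches):
    """Return, per clock step, the (cell, micro_batch) pairs that run concurrently.

    Assumes every cell takes one step per micro-batch. Cell k can start
    micro-batch m only after cell k - 1 has finished it, so micro-batch m
    reaches cell k at step m + k.
    """
    steps = []
    for t in range(num_micro_batches + num_cells - 1):
        active = [(k, t - k) for k in range(num_cells)
                  if 0 <= t - k < num_micro_batches]
        steps.append(active)
    return steps


def summed_gradient(w, batch):
    """Gradient of sum(0.5 * (w * x - y)**2) with respect to w (toy model)."""
    return sum((w * x - y) * x for x, y in batch)


def accumulated_gradient(w, mini_batch, num_micro_batches):
    """Accumulate gradients over all micro-batches, then average once."""
    total = 0.0
    for micro_batch in split_into_micro_batches(mini_batch, num_micro_batches):
        total += summed_gradient(w, micro_batch)
    return total / len(mini_batch)


# Hypothetical setup: 3 cells (accelerators), 4 micro-batches per mini-batch.
for step, active in enumerate(forward_schedule(num_cells=3, num_micro_batches=4)):
    print(step, active)

# The weight is updated once per mini-batch, using the accumulated gradient.
data = [(1.0, 2.0), (2.0, 4.5), (3.0, 5.5), (4.0, 8.0)]
w = 0.5
w_next = w - 0.01 * accumulated_gradient(w, data, num_micro_batches=2)
```

In this idealized schedule the forward pass over M micro-batches and K cells takes M + K - 1 steps, so only the first and last K - 1 steps leave some cells idle; using more micro-batches per mini-batch shrinks that idle fraction.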

Source: GPipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism

Tasks

| Task | Papers | Share |
|---|---|---|
| Image Classification | 2 | 16.67% |
| Machine Translation | 2 | 16.67% |
| Sentiment Analysis | 1 | 8.33% |
| Language Modelling | 1 | 8.33% |
| Graph Partitioning | 1 | 8.33% |
| BIG-bench Machine Learning | 1 | 8.33% |
| Link Prediction | 1 | 8.33% |
| Protein Folding | 1 | 8.33% |
| Fine-Grained Image Classification | 1 | 8.33% |
