Policy Gradient Methods

Fisher-BRC

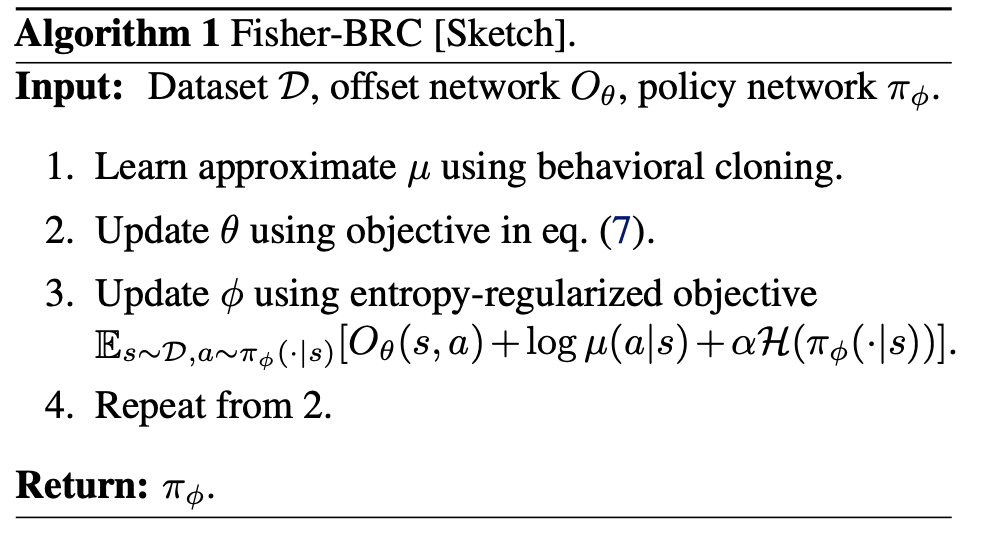

Introduced by Kostrikov et al. in Offline Reinforcement Learning with Fisher Divergence Critic Regularization

Fisher-BRC is an actor-critic algorithm for offline reinforcement learning that encourages the learned policy to stay close to the data. It does so by parameterizing the critic as the $\log$ of the behavior policy (the policy that generated the offline dataset) plus a state-action value offset term, which can be learned with a neural network. Behavior regularization then corresponds to an appropriate regularizer on the offset term. Fisher-BRC uses a gradient penalty on the offset term, which is equivalent to Fisher divergence regularization and suggests connections to the score matching and generative energy-based model literature.
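The critic parameterization and the gradient penalty described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the network sizes, the unit-variance Gaussian behavior policy, and every name (`offset`, `log_behavior`, `critic`, `gradient_penalty`) are assumptions chosen for illustration. The sketch also assumes the penalty is the mean squared norm of the offset's gradient with respect to the action, and it leaves out the Bellman error term and the penalty coefficient that a full critic loss would include.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, ACTION_DIM, HIDDEN = 3, 2, 16

# Hypothetical offset network O(s, a): one tanh hidden layer, scalar output.
W1 = rng.normal(scale=0.5, size=(STATE_DIM + ACTION_DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
w2 = rng.normal(scale=0.5, size=HIDDEN)

# Hypothetical behavior policy mu(a|s): unit-variance Gaussian with linear mean.
# In practice it would be fitted to the offline dataset (behavior cloning).
M = rng.normal(scale=0.5, size=(STATE_DIM, ACTION_DIM))


def log_behavior(s, a):
    """log mu(a|s) for the assumed unit-variance Gaussian behavior policy."""
    diff = a - s @ M
    return -0.5 * np.sum(diff ** 2, axis=-1) - 0.5 * ACTION_DIM * np.log(2 * np.pi)


def _hidden(s, a):
    """Hidden activations of the offset network."""
    return np.tanh(np.concatenate([s, a], axis=-1) @ W1 + b1)


def offset(s, a):
    """Offset term O(s, a)."""
    return _hidden(s, a) @ w2


def offset_action_grad(s, a):
    """Analytic gradient of O(s, a) with respect to the action."""
    h = _hidden(s, a)
    grad_input = ((1.0 - h ** 2) * w2) @ W1.T  # gradient w.r.t. [s, a]
    return grad_input[..., STATE_DIM:]         # keep only the action part


def critic(s, a):
    """Critic as offset plus log behavior policy: Q(s, a) = O(s, a) + log mu(a|s)."""
    return offset(s, a) + log_behavior(s, a)


def gradient_penalty(s, a):
    """Mean squared norm of the action-gradient of the offset term."""
    g = offset_action_grad(s, a)
    return np.mean(np.sum(g ** 2, axis=-1))


# Usage on a random batch (stand-in for dataset states and sampled actions).
states = rng.normal(size=(4, STATE_DIM))
actions = rng.normal(size=(4, ACTION_DIM))
q_values = critic(states, actions)
penalty = gradient_penalty(states, actions)
```

Because the behavior term enters the critic additively, only the offset network is regularized; the hand-written gradient here stands in for what an automatic differentiation library would compute in a real training loop.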

Source: Offline Reinforcement Learning with Fisher Divergence Critic Regularization
