Distributed Methods

FastMoE

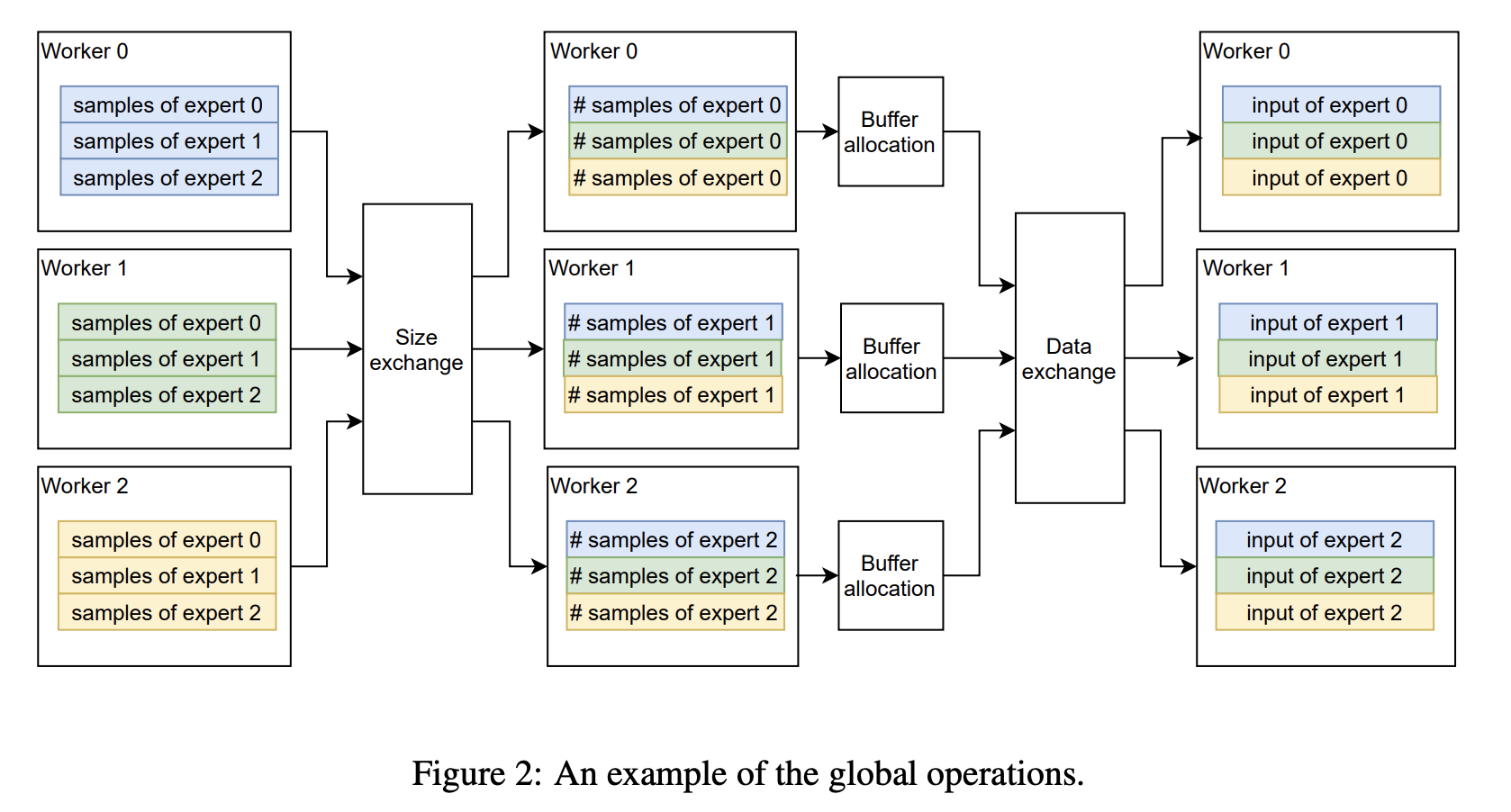

Introduced by He et al. in FastMoE: A Fast Mixture-of-Expert Training System.

FastMoE is a distributed Mixture-of-Experts (MoE) training system built on PyTorch with common accelerators. The system provides a hierarchical interface for both flexible model design and easy adaptation to different applications, such as Transformer-XL and Megatron-LM.

Source: FastMoE: A Fast Mixture-of-Expert Training System
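To make the MoE idea concrete, here is a minimal sketch of top-k gated expert routing for a single token, written in plain Python with no dependencies. It is not FastMoE's API and does not show its distributed execution; the names `moe_forward`, `gate_weights`, and `experts` are hypothetical, and the linear gate with softmax is an assumed, commonly used gating scheme.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: List[Vector],
                experts: List[Expert], top_k: int = 2) -> Vector:
    """Route one token through its top_k experts and mix their outputs.

    Assumes every expert returns a vector with the same length as x.
    """
    # One gate score per expert: dot product of the token with that expert's gate row.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    # Keep the top_k experts with the highest gate probability.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Renormalize the selected probabilities so the mixing weights sum to 1.
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm
        y = experts[i](x)
        out = [o + weight * y_j for o, y_j in zip(out, y)]
    return out


# Three toy experts (hypothetical): scale, shift, negate.
experts = [
    lambda v: [2.0 * a for a in v],
    lambda v: [a + 1.0 for a in v],
    lambda v: [-a for a in v],
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

mixed = moe_forward([2.0, 2.0], gate_weights, experts, top_k=2)
print(mixed)
```

Only the selected experts are evaluated for each token, which is what keeps the compute cost of an MoE layer roughly constant as the number of experts grows. In a distributed system the experts would live on different workers, so routing also involves exchanging tokens between devices, which this sketch leaves out.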

