ELEVATER (Evaluation of Language-augmented Visual Task-level Transfer)

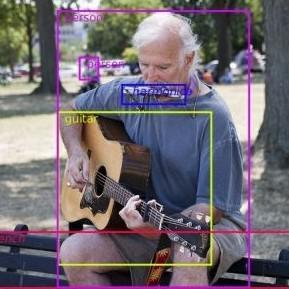

Introduced by Li et al. in ELEVATER: A Benchmark and Toolkit for Evaluating Language-Augmented Visual Models.

The ELEVATER benchmark is a collection of resources for training, evaluating, and analyzing language-image models on image classification and object detection. ELEVATER consists of:

- Benchmark: A benchmark suite of 20 image classification datasets and 35 object detection datasets, augmented with external knowledge.

- Toolkit: An automatic hyperparameter-tuning toolkit, plus strong language-augmented methods for efficient model adaptation.

- Baseline: Pre-trained language-free and language-augmented visual models.

- Knowledge: A platform to study the benefit of external knowledge for vision problems.

- Evaluation Metrics: Sample efficiency (zero-, few-, and full-shot) and parameter efficiency.

- Leaderboard: A public leaderboard to track performance on the benchmark.
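Sample efficiency compares a model across training budgets: no labeled images (zero-shot), a few per class (few-shot), or the whole training set (full-shot). The sketch below shows one way such splits could be drawn from a list of labels. It assumes the common convention that "k-shot" means up to k labeled images per class; the helper `build_split` is hypothetical and is not part of the ELEVATER toolkit, which ships its own sampling and evaluation code.

```python
import random
from collections import defaultdict


def build_split(labels, shots, seed=0):
    """Return sorted training indices for a given shot budget.

    Hypothetical helper (not the ELEVATER toolkit API):
      shots=None -> full-shot (every example)
      shots=0    -> zero-shot (no training examples)
      shots=k    -> up to k examples per class, sampled with a fixed seed
    """
    if shots is None:
        return list(range(len(labels)))

    # Group example indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # A seeded RNG makes the few-shot subset reproducible across runs.
    rng = random.Random(seed)
    chosen = []
    for label in sorted(by_class):
        indices = by_class[label]
        # Classes with fewer than k examples contribute all they have.
        chosen.extend(rng.sample(indices, min(shots, len(indices))))
    return sorted(chosen)


if __name__ == "__main__":
    labels = ["cat", "dog", "cat", "bird", "dog", "cat", "bird", "dog"]
    for budget in (0, 1, 2, None):
        print(budget, build_split(labels, budget))
```

Fixing the seed matters here: few-shot scores can vary noticeably with which images are drawn, so a reproducible subset (or several seeds averaged) keeps comparisons between models fair.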

The ultimate goal of ELEVATER is to drive research on language-image models that tackle core computer vision problems in the wild.
