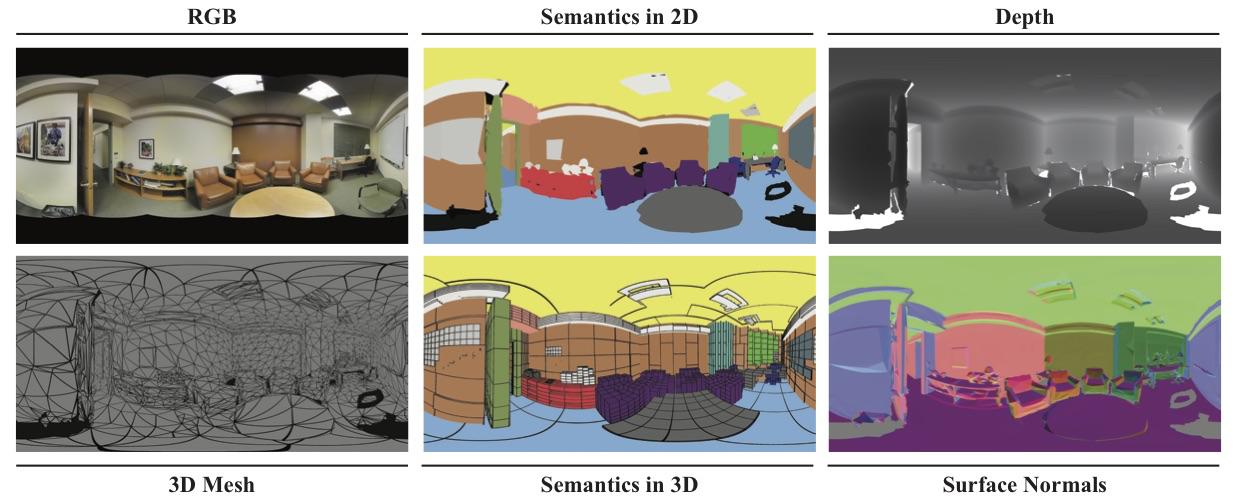

2D-3D-S (2D-3D-Semantic)

Introduced by Armeni et al. in Joint 2D-3D-Semantic Data for Indoor Scene Understanding

The 2D-3D-S dataset provides a variety of mutually registered modalities from the 2D, 2.5D and 3D domains, with instance-level semantic and geometric annotations. It covers over 6,000 m² collected in 6 large-scale indoor areas that originate from 3 different buildings. It contains over 70,000 RGB images, along with the corresponding depths, surface normals, semantic annotations and global XYZ images (all in the form of both regular and 360° equirectangular images), as well as camera information. It also includes registered raw and semantically annotated 3D meshes and point clouds. The dataset enables the development of joint and cross-modal learning models, and potentially unsupervised approaches that exploit the regularities present in large-scale indoor spaces.
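For readers new to the 360° equirectangular format mentioned above, here is a minimal sketch of how a pixel in such an image corresponds to a viewing direction. The function name `equirect_pixel_to_ray` and the axis convention (y up, z forward at the image center) are assumptions chosen for illustration. They are not taken from the dataset's documentation, so check the dataset's own camera information before relying on a particular convention.

```python
import math


def equirect_pixel_to_ray(u, v, width, height):
    """Map pixel (u, v) of an equirectangular image to a unit direction.

    Assumed convention (illustrative only, not the dataset's):
    longitude runs from -pi at the left edge to +pi at the right edge,
    latitude runs from +pi/2 at the top edge to -pi/2 at the bottom edge,
    y points up and z points toward the image center.
    """
    # Use the pixel center (hence the +0.5) when converting to angles.
    lon = ((u + 0.5) / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - (v + 0.5) / height) * math.pi

    # Standard spherical-to-Cartesian conversion on the unit sphere.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)
```

Under this assumed convention, the point at the exact center of the image maps to the forward direction, and every returned vector has unit length, so multiplying it by a per-pixel range value would give a 3D point in the camera frame.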

Source: https://github.com/alexsax/2D-3D-Semantics
