Search Results for author: Xiaoyang Tan

Found 19 papers, 6 papers with code

HiQA: A Hierarchical Contextual Augmentation RAG for Massive Documents QA

no code implementations • 1 Feb 2024 • Xinyue Chen, Pengyu Gao, Jiangjiang Song, Xiaoyang Tan

As language model agents that leverage external tools rapidly evolve, significant progress has been made in question-answering (QA) methods that use supplementary documents and the Retrieval-Augmented Generation (RAG) approach.

M$^3$Net: Multilevel, Mixed and Multistage Attention Network for Salient Object Detection

1 code implementation • 15 Sep 2023 • Yao Yuan, Pan Gao, Xiaoyang Tan

To overcome these, we propose M$^3$Net, i.e., the Multilevel, Mixed and Multistage attention network for Salient Object Detection (SOD).

Ranked #2 on RGB Salient Object Detection on HKU-IS


ProxyFormer: Proxy Alignment Assisted Point Cloud Completion with Missing Part Sensitive Transformer

1 code implementation • CVPR 2023 • Shanshan Li, Pan Gao, Xiaoyang Tan, Mingqiang Wei

Specifically, we fuse information into point proxies via a feature and position extractor, and generate features for missing point proxies from the features of existing point proxies.

Contextual Conservative Q-Learning for Offline Reinforcement Learning

no code implementations • 3 Jan 2023 • Ke Jiang, Jiayu Yao, Xiaoyang Tan

In this paper, we propose Contextual Conservative Q-Learning (C-CQL) to learn a robustly reliable policy through the contextual information captured via an inverse dynamics model.
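As its name indicates, C-CQL extends Conservative Q-Learning (CQL). For background only, the sketch below computes the standard CQL(H)-style regularizer for a single state with discrete actions: a log-sum-exp over all Q-values minus the Q-value of the action recorded in the offline dataset. It does not include the contextual, inverse-dynamics component proposed in the paper; the function name `cql_penalty` and the toy Q-values are illustrative assumptions.

```python
import math
from typing import Sequence


def cql_penalty(q_values: Sequence[float], data_action: int) -> float:
    """Standard CQL(H)-style penalty for one state with discrete actions.

    Pushes down a soft maximum (log-sum-exp) over all actions while pushing
    up the Q-value of the action actually observed in the offline dataset.
    """
    m = max(q_values)  # subtract the max for a numerically stable log-sum-exp
    logsumexp = m + math.log(sum(math.exp(q - m) for q in q_values))
    return logsumexp - q_values[data_action]


# Hypothetical toy values: three actions, the dataset took action index 2.
penalty = cql_penalty([1.0, 2.0, 3.0], data_action=2)
print(round(penalty, 4))
```

In a full offline agent this term would typically be averaged over a batch, scaled by a coefficient, and added to the usual temporal-difference loss, which discourages overestimating actions that never appear in the data.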

Robust Action Gap Increasing with Clipped Advantage Learning

no code implementations • 20 Mar 2022 • Zhe Zhang, Yaozhong Gan, Xiaoyang Tan

Advantage Learning (AL) seeks to increase the action gap between the optimal action and its competitors, so as to improve the robustness to estimation errors.
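Both advantage-learning entries in this list build on the classic advantage-learning operator (Baird; Bellemare et al.), which subtracts alpha * (V(s) - Q(s,a)) from the Bellman optimality target, with V(s) = max_b Q(s,b). The minimal tabular sketch below assumes a hypothetical one-state MDP with two actions and shows the action gap widening; it is neither the clipped variant nor the smoothed variant from these papers.

```python
from typing import List


def advantage_learning(rewards: List[float], gamma: float, alpha: float,
                       iters: int = 1000) -> List[float]:
    """Iterate the advantage-learning operator on a one-state MDP.

    Every action returns to the same state, so the Bellman target for action a
    is rewards[a] + gamma * V with V = max_b Q[b]. Advantage learning then
    subtracts alpha * (V - Q[a]), which lowers suboptimal actions further.
    alpha = 0 recovers the ordinary Bellman optimality operator.
    """
    q = [0.0] * len(rewards)
    for _ in range(iters):
        v = max(q)
        q = [r + gamma * v - alpha * (v - qa) for r, qa in zip(rewards, q)]
    return q


def action_gap(q: List[float]) -> float:
    """Gap between the best and second-best action values."""
    best, second = sorted(q, reverse=True)[:2]
    return best - second


# Hypothetical rewards: action 0 is slightly better than action 1.
q_bellman = advantage_learning([1.0, 0.9], gamma=0.9, alpha=0.0)
q_al = advantage_learning([1.0, 0.9], gamma=0.9, alpha=0.5)
print(action_gap(q_bellman), action_gap(q_al))  # gap grows by 1 / (1 - alpha)
```

The optimal action keeps the same value under both operators, while the suboptimal one is pushed down, so a small estimation error is less likely to flip the greedy choice.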

Smoothing Advantage Learning

no code implementations • 20 Mar 2022 • Yaozhong Gan, Zhe Zhang, Xiaoyang Tan

Advantage learning (AL) aims to improve the robustness of value-based reinforcement learning against estimation errors with action-gap-based regularization.

A Cooperative-Competitive Multi-Agent Framework for Auto-bidding in Online Advertising

1 code implementation • 11 Jun 2021 • Chao Wen, Miao Xu, Zhilin Zhang, Zhenzhe Zheng, Yuhui Wang, Xiangyu Liu, Yu Rong, Dong Xie, Xiaoyang Tan, Chuan Yu, Jian Xu, Fan Wu, Guihai Chen, Xiaoqiang Zhu, Bo Zheng

Third, to deploy MAAB in the large-scale advertising system with millions of advertisers, we propose a mean-field approach.

Greedy-Step Off-Policy Reinforcement Learning

no code implementations • 23 Feb 2021 • Yuhui Wang, Qingyuan Wu, Pengcheng He, Xiaoyang Tan

Most policy evaluation algorithms are based on the Bellman Expectation and Optimality Equations, from which two popular approaches are derived: Policy Iteration (PI) and Value Iteration (VI).
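For reference, textbook value iteration turns the Bellman optimality equation V(s) = max_a [r(s,a) + gamma * V(s')] directly into a fixed-point update. The sketch below assumes a tiny hypothetical deterministic MDP (`mdp`, with made-up states and rewards) and illustrates standard VI only, not the greedy-step method proposed in the paper.

```python
from typing import Dict, List, Tuple

# Hypothetical deterministic MDP: transitions[state][action] = (next_state, reward).
Transitions = Dict[int, Dict[str, Tuple[int, float]]]


def value_iteration(transitions: Transitions, gamma: float,
                    tol: float = 1e-10) -> List[float]:
    """Repeatedly apply the Bellman optimality backup until values stop changing."""
    v = [0.0] * len(transitions)
    while True:
        new_v = [
            max(r + gamma * v[s2] for s2, r in transitions[s].values())
            for s in range(len(transitions))
        ]
        if max(abs(a - b) for a, b in zip(new_v, v)) < tol:
            return new_v
        v = new_v


mdp: Transitions = {
    0: {"stay": (0, 0.0), "move": (1, 0.0)},  # state 0 earns nothing directly
    1: {"stay": (1, 1.0)},                    # state 1 pays 1 per step forever
}
values = value_iteration(mdp, gamma=0.9)
print(values)
```

Policy iteration instead alternates two steps: evaluating a fixed policy with the Bellman expectation equation, then improving it greedily. Value iteration folds the max into every backup.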

Stabilizing Q Learning Via Soft Mellowmax Operator

no code implementations • 17 Dec 2020 • Yaozhong Gan, Zhe Zhang, Xiaoyang Tan

Learning complicated value functions in high-dimensional state spaces by function approximation is challenging, partly because the max operator used in temporal-difference updates can, in theory, cause instability for most linear or non-linear approximation schemes.
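The Soft Mellowmax operator is a variant of the mellowmax operator (Asadi and Littman, 2017), which replaces max with (1/omega) * log(mean(exp(omega * x))). As a stand-in, the sketch below implements plain mellowmax only, to show how a smooth operator can take the place of max in a TD target; the exact soft mellowmax weighting from the paper is not reproduced, and the Q-values are hypothetical.

```python
import math
from typing import Sequence


def mellowmax(values: Sequence[float], omega: float) -> float:
    """Mellowmax: (1/omega) * log(mean(exp(omega * x))), for omega > 0.

    Unlike max, it is differentiable in its inputs; it tends to the mean as
    omega -> 0 and to the max as omega -> infinity.
    """
    m = max(values)  # shift by the max so exp() cannot overflow
    mean_exp = sum(math.exp(omega * (x - m)) for x in values) / len(values)
    return m + math.log(mean_exp) / omega


q = [1.0, 2.0, 3.0]  # hypothetical next-state Q-values
for omega in (0.01, 1.0, 100.0):
    print(omega, mellowmax(q, omega))
```

In a TD update, the target r + gamma * max(q) would become r + gamma * mellowmax(q, omega), with omega trading off between averaging and maximizing.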

Deep Robust Multilevel Semantic Cross-Modal Hashing

no code implementations • 7 Feb 2020 • Ge Song, Jun Zhao, Xiaoyang Tan

Hashing based cross-modal retrieval has recently made significant progress.

SMIX($λ$): Enhancing Centralized Value Functions for Cooperative Multi-Agent Reinforcement Learning

1 code implementation • 11 Nov 2019 • Xinghu Yao, Chao Wen, Yuhui Wang, Xiaoyang Tan

Learning a stable and generalizable centralized value function (CVF) is a crucial but challenging task in multi-agent reinforcement learning (MARL), because the joint action space grows exponentially with the number of agents.

Truly Proximal Policy Optimization

1 code implementation • 19 Mar 2019 • Yuhui Wang, Hao He, Chao Wen, Xiaoyang Tan

Proximal policy optimization (PPO) is one of the most successful deep reinforcement learning methods, achieving state-of-the-art performance across a wide range of challenging tasks.
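Both PPO entries in this list (Truly PPO and Trust Region-Guided PPO) modify how PPO restricts the policy update. As a baseline reference only, the sketch below shows the per-sample clipped surrogate from the original PPO (Schulman et al., 2017); it is not either paper's method, and the default epsilon of 0.2 is an assumed, commonly used value.

```python
def ppo_clip_objective(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """Per-sample PPO clipped surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratio is pi_new(a|s) / pi_old(a|s). Taking the minimum makes the objective a
    pessimistic bound, so moving the ratio outside [1 - eps, 1 + eps] earns no
    extra credit.
    """
    clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
    return min(ratio * advantage, clipped_ratio * advantage)


# Positive advantage: gains are capped once the ratio exceeds 1 + eps.
print(ppo_clip_objective(1.5, advantage=1.0))
# Negative advantage: the pessimistic min keeps the larger penalty.
print(ppo_clip_objective(0.5, advantage=-1.0))
```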

Robust Reinforcement Learning in POMDPs with Incomplete and Noisy Observations

no code implementations • 15 Feb 2019 • Yuhui Wang, Hao He, Xiaoyang Tan

In real-world scenarios, the observation data for reinforcement learning with continuous control are commonly noisy, and parts of them may be dynamically missing over time, which violates the assumptions of many current methods developed for this setting.

Trust Region-Guided Proximal Policy Optimization

2 code implementations • NeurIPS 2019 • Yuhui Wang, Hao He, Xiaoyang Tan, Yaozhong Gan

We formally show that this method not only improves the exploration ability within the trust region but also enjoys a better performance bound than the original PPO.

A Unified Gender-Aware Age Estimation

no code implementations • 13 Sep 2016 • Qing Tian, Songcan Chen, Xiaoyang Tan

Although it improves age estimation performance, such a concatenation not only risks confusing the semantics of gender and age but also ignores the aging discrepancy between males and females.

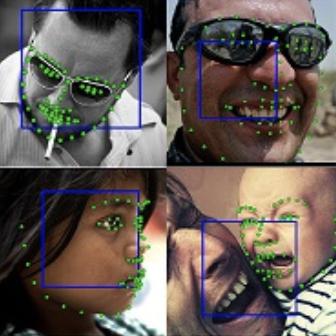

Face Alignment In-the-Wild: A Survey

no code implementations • 15 Aug 2016 • Xin Jin, Xiaoyang Tan

Over the last two decades, face alignment or localizing fiducial facial points has received increasing attention owing to its comprehensive applications in automatic face analysis.

Bayesian Neighbourhood Component Analysis

no code implementations • 8 Apr 2016 • Dong Wang, Xiaoyang Tan

Learning a good distance metric in feature space potentially improves the performance of the KNN classifier and is useful in many real-world applications.
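Bayesian NCA builds on Neighbourhood Component Analysis (Goldberger et al., 2004). As background, the sketch below computes the classic, non-Bayesian NCA objective for a given linear map A: the expected number of points correctly classified by a stochastic 1-nearest-neighbour rule in the projected space. Learning A by gradient ascent, and the Bayesian treatment in the paper, are left out; the points, labels, and matrices in the example are hypothetical.

```python
import math
from typing import List, Sequence


def nca_objective(points: Sequence[Sequence[float]], labels: Sequence[int],
                  transform: Sequence[Sequence[float]]) -> float:
    """Expected number of points correctly classified by stochastic 1-NN.

    Each point i picks neighbour j with probability proportional to
    exp(-||A x_i - A x_j||^2) (never itself); the objective sums the
    probability mass landing on neighbours that share i's label.
    """
    def project(x: Sequence[float]) -> List[float]:
        return [sum(a * xi for a, xi in zip(row, x)) for row in transform]

    z = [project(x) for x in points]
    total = 0.0
    for i in range(len(z)):
        weights = [
            0.0 if j == i else math.exp(-sum((a - b) ** 2 for a, b in zip(z[i], z[j])))
            for j in range(len(z))
        ]
        norm = sum(weights)
        total += sum(w for w, y in zip(weights, labels) if y == labels[i]) / norm
    return total


points = [[0.0, 0.0], [0.0, 1.0], [5.0, 0.0], [5.0, 1.0]]
labels = [0, 0, 1, 1]
identity = [[1.0, 0.0], [0.0, 1.0]]
drop_first = [[0.0, 1.0], [0.0, 0.0]]  # discards the class-separating coordinate
print(nca_objective(points, labels, identity), nca_objective(points, labels, drop_first))
```

A metric that keeps the class-separating coordinate scores close to the number of points, while one that discards it scores much lower, which is the signal NCA-style methods optimize when learning A for KNN.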

Tri-Subject Kinship Verification: Understanding the Core of A Family

no code implementations • 12 Jan 2015 • Xiaoqian Qin, Xiaoyang Tan, Songcan Chen

One major challenge in computer vision is to go beyond the modeling of individual objects and to investigate the bi- (one-versus-one) or tri- (one-versus-two) relationship among multiple visual entities, answering such questions as whether a child in a photo belongs to given parents.

Unsupervised Feature Learning with C-SVDDNet

no code implementations • 23 Dec 2014 • Dong Wang, Xiaoyang Tan

To address this issue, we propose an SVDD-based feature learning algorithm that describes the density and distribution of each K-means cluster with an SVDD ball, yielding a more robust feature representation.

Ranked #23 on Image Classification on MNIST
