Activation Functions


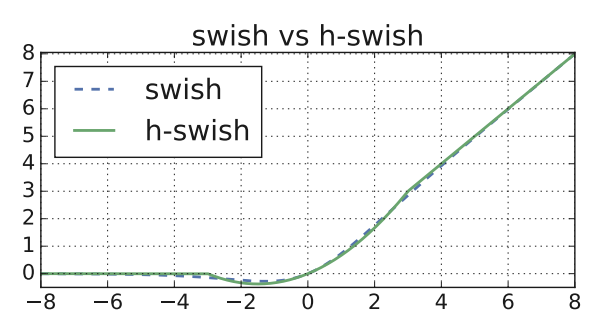

Hard Swish

Introduced by Howard et al. in Searching for MobileNetV3

Hard Swish is an activation function based on Swish that replaces the computationally expensive sigmoid with a piecewise linear analogue:

$$\text{h-swish}\left(x\right) = x\frac{\text{ReLU6}\left(x+3\right)}{6} $$

Source: Searching for MobileNetV3
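The h-swish definition above can be sketched in a few lines of plain Python. This is a minimal illustration, not the reference implementation from the paper: the function names `relu6`, `hard_swish`, and `swish` are chosen here for clarity, and `swish` assumes the common form $x \cdot \sigma(x)$ so the two curves can be compared.

```python
import math


def relu6(x: float) -> float:
    """ReLU6: clamp x to the interval [0, 6]."""
    return min(max(x, 0.0), 6.0)


def hard_swish(x: float) -> float:
    """h-swish(x) = x * ReLU6(x + 3) / 6, using only a clamp and a multiply."""
    return x * relu6(x + 3.0) / 6.0


def swish(x: float) -> float:
    """Swish as x * sigmoid(x) (assumed form, for comparison only)."""
    return x / (1.0 + math.exp(-x))


if __name__ == "__main__":
    # Print both activations side by side at a few sample points.
    for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(f"x={x:+.1f}  h-swish={hard_swish(x):+.4f}  swish={swish(x):+.4f}")
```

From the formula, h-swish is zero for $x \le -3$, equals $x$ for $x \ge 3$, and follows the quadratic $x(x+3)/6$ in between, so it needs no exponential.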

Tasks

| Task | Papers | Share |
|---|---|---|
| Image Classification | 12 | 13.04% |
| Object Detection | 9 | 9.78% |
| Classification | 6 | 6.52% |
| Quantization | 5 | 5.43% |
| Decoder | 5 | 5.43% |
| Semantic Segmentation | 4 | 4.35% |
| Bayesian Optimization | 3 | 3.26% |
| Neural Network Compression | 2 | 2.17% |
| Network Pruning | 2 | 2.17% |

ReLU6
